Modern artificial intelligence systems rely on a critical milestone: the point at which further training stops producing meaningful improvement. This moment, known as convergence, is central to developing reliable neural networks and occurs when predictions stabilise within acceptable error margins.

Engineers and data scientists monitor this process closely, because it determines whether models achieve sufficient accuracy for real-world deployment. Once the loss stops decreasing appreciably or falls below a chosen threshold, further iterations yield diminishing returns.

The principle differs fundamentally from traditional software development. Rather than explicitly coding solutions, systems learn patterns through repeated exposure to data. This approach revolutionises sectors from medical diagnostics to autonomous vehicles.

Understanding this phenomenon helps practitioners optimise training efficiency. It prevents wasted computational resources while ensuring models generalise effectively beyond training datasets. Applications span computer vision, speech recognition, and predictive analytics.

Our guide explores the mathematical foundations and practical implications of this process. Subsequent sections will analyse optimisation techniques, common pitfalls, and evaluation metrics essential for building robust AI solutions.

Introduction to Convergence in Deep Learning

The efficacy of machine learning models hinges on their ability to reach a state where further error reduction levels off. This stabilisation of parameters determines whether neural networks can move from theoretical constructs to practical tools.

Training adjusts weights iteratively, using gradients computed by backpropagation. As systems work through the dataset, their predictive accuracy improves until further refinements yield negligible returns. Engineers monitor this progression using the loss and related metrics, looking for three signs:

- Error rates stabilise within predefined thresholds

- Weight matrices cease significant fluctuations

- Validation scores align with training performance
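As a rough sketch, the three signs above can be turned into an automated check on the two most recent epochs. The helper names and the thresholds (`loss_tol`, `weight_tol`, `gap_tol`) are illustrative assumptions rather than standard values; real projects tune them per model and usually look at several epochs, not just the last two.

```python
import math

def relative_change(old, new, eps=1e-12):
    """Relative change between two scalars, guarded against division by zero."""
    return abs(new - old) / max(abs(old), eps)

def weight_change(old_weights, new_weights, eps=1e-12):
    """Relative L2 change of a flattened weight vector between two epochs."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(old_weights, new_weights)))
    norm = math.sqrt(sum(a * a for a in old_weights))
    return diff / max(norm, eps)

def looks_converged(train_losses, val_losses, old_weights, new_weights,
                    loss_tol=1e-3, weight_tol=1e-3, gap_tol=0.1):
    """Apply the three checks to the two most recent epochs (thresholds are assumptions)."""
    # 1. Training loss has (almost) stopped changing.
    loss_stable = relative_change(train_losses[-2], train_losses[-1]) < loss_tol
    # 2. Weights have (almost) stopped moving.
    weights_stable = weight_change(old_weights, new_weights) < weight_tol
    # 3. Validation loss is within gap_tol (relative) of the training loss.
    gap = (val_losses[-1] - train_losses[-1]) / max(train_losses[-1], 1e-12)
    val_aligned = gap < gap_tol
    return loss_stable and weights_stable and val_aligned

print(looks_converged([0.90, 0.41, 0.2502, 0.2500], [0.95, 0.45, 0.27, 0.26],
                      [0.5, -1.2], [0.5001, -1.2001]))  # True
```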

Different architectures exhibit unique convergence patterns. Convolutional networks handling image data, for instance, often require longer training cycles than feedforward models processing structured inputs. This variation underscores the importance of optimisation strategies in neural networks.

“Proper convergence acts as the bridge between algorithmic potential and real-world reliability”

Practical implementations balance mathematical guarantees with computational realities. While theory suggests ideal stopping points, practitioners must account for hardware limitations and dataset complexities. These considerations shape deployment decisions across sectors from fintech to healthcare diagnostics.

Understanding the Concept of Convergence in AI

The journey of artificial intelligence systems from raw data to reliable predictions hinges on a fundamental process: training must eventually settle into a stable state. That stabilisation point determines whether models can turn inputs into actionable insights across industries.

Defining Stabilisation in Machine Systems

Optimisation methods like gradient descent drive parameter adjustments in computational models. These iterative updates gradually reduce discrepancies between predictions and actual outcomes. Training ceases when error margins meet predefined thresholds, signalling stable performance.

Three critical indicators mark this stabilisation:

- Loss values plateau across successive epochs

- Gradient magnitudes approach near-zero values

- Validation metrics align with training benchmarks
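A minimal sketch of the second indicator in action: plain gradient descent on a toy two-parameter quadratic (an assumption standing in for a real loss), halting once the gradient norm falls below a tolerance. The function names, learning rate and tolerance are hypothetical.

```python
def gradient_descent(grad_fn, w0, lr=0.1, grad_tol=1e-6, max_iters=10_000):
    """Plain gradient descent that stops once the gradient norm is near zero."""
    w = list(w0)
    for step in range(max_iters):
        g = grad_fn(w)
        grad_norm = sum(gi * gi for gi in g) ** 0.5
        if grad_norm < grad_tol:
            return w, step  # converged: gradient magnitude is effectively zero
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w, max_iters  # iteration budget exhausted before convergence

def quadratic_grad(w):
    # Gradient of the toy loss L(w) = (w0 - 3)**2 + 2 * (w1 + 1)**2, minimised at (3, -1).
    return [2 * (w[0] - 3), 4 * (w[1] + 1)]

w, steps = gradient_descent(quadratic_grad, [0.0, 0.0])
print(f"w = ({w[0]:.6f}, {w[1]:.6f}) after {steps} steps")
```

The run ends near (3, -1) well within the iteration budget; the `max_iters` cap is there because a badly chosen learning rate may never satisfy the gradient test.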

Architectural Impact on Pattern Recognition

Complex structures process information through layered transformations. Recurrent designs handling sequential data exhibit different stabilisation patterns compared to convolutional systems analysing spatial relationships. Parameter tuning becomes crucial for balancing accuracy with computational efficiency.

“Effective stabilisation turns theoretical models into practical tools for decision-making”

Practitioners monitor training curves to identify optimal stopping points. Early termination risks underdeveloped capabilities, while prolonged training wastes resources. Modern frameworks incorporate automated checks to balance these factors in applications ranging from fraud detection to genomic analysis.

Explaining What Convergence Means in Deep Learning

Neural architectures achieve reliable performance when training processes reach equilibrium states. This occurs as systems digest patterns from data, adjusting parameters until further changes yield negligible improvements. Practitioners track this progression through loss function behaviour – consistent decreases signal effective adaptation.

Key Terminologies and Definitions

The optimisation landscape features critical landmarks that influence training outcomes. A local minimum is a point where the loss is lower than at all nearby parameter settings but not the lowest overall; because the gradient there is zero, gradient descent can stall. A global minimum is the parameter configuration with the lowest possible training loss, the ideal target of training. Saddle points, where the loss curves upwards in some directions and downwards in others, also have near-zero gradients and can mislead or slow optimisation algorithms.
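The difference between local and global minima can be seen on a one-dimensional toy loss, f(x) = x^4 - 3x^2 + x, chosen here purely for illustration. Plain gradient descent with identical settings settles into a different minimum depending on where it starts. (A one-dimensional example cannot show a saddle point, which needs at least two parameters.)

```python
def f(x):
    # Toy non-convex loss: local minimum near x = 1.13, global minimum near x = -1.30.
    return x**4 - 3 * x**2 + x

def df(x):
    # Derivative of f.
    return 4 * x**3 - 6 * x + 1

def descend(x, lr=0.01, steps=5_000):
    """Fixed-step gradient descent from starting point x."""
    for _ in range(steps):
        x -= lr * df(x)
    return x

local_end = descend(2.0)    # starts on the right-hand slope
global_end = descend(-2.0)  # starts on the left-hand slope
print(f"start  2.0 -> x = {local_end:.3f}, loss = {f(local_end):.3f}")
print(f"start -2.0 -> x = {global_end:.3f}, loss = {f(global_end):.3f}")
```

Starting from 2.0 the iterate settles near x ≈ 1.13 (loss ≈ -1.07, a local minimum); starting from -2.0 it settles near x ≈ -1.30 (loss ≈ -3.51, the global minimum). Both runs have converged, but only one found the best solution.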

Three primary stabilisation types govern system development:

- Parameter alignment: Weight matrices cease significant fluctuations

- Functional consistency: Predictions for the same inputs stop changing from one epoch to the next

- Performance plateaus: Validation metrics match training benchmarks

“Recognising true stabilisation separates functional models from experimental curiosities”

Training duration directly impacts generalisation capabilities. Systems halted prematurely risk underdeveloped pattern recognition, while overprocessed models waste resources. Modern frameworks employ automated checks comparing epoch-by-epoch metric variations against predefined thresholds.
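Such automated checks are often implemented as early stopping with a patience counter. The sketch below is a standalone illustration working on a precomputed list of validation losses; its `patience` and `min_delta` parameters loosely mirror arguments of the same names in Keras's `EarlyStopping` callback, but the function itself is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=1e-4):
    """Return (best_epoch, stop_epoch) for a list of per-epoch validation losses.

    Training stops once `patience` consecutive epochs fail to beat the best
    validation loss so far by at least `min_delta`; the weights saved at
    `best_epoch` are the ones worth keeping.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Ran out of epochs before patience was exhausted.
    return best_epoch, len(val_losses) - 1

print(early_stopping_epoch([0.90, 0.60, 0.45, 0.40, 0.41, 0.42, 0.43, 0.39]))  # (3, 6)
```

Note that the later dip to 0.39 is never seen: patience trades the chance of a late improvement for saved compute.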

Effective monitoring combines quantitative analysis with architectural understanding. Convolutional networks processing visual data, for instance, exhibit different stabilisation timelines than transformer models handling language tasks. This knowledge informs decisions about tuning hyperparameters or expanding training datasets.

Mathematical Foundations and Algorithmic Insights

Training neural networks resembles navigating a multidimensional terrain where algorithms seek optimal pathways. This process relies on mathematical principles that govern how systems refine their predictive capabilities through iterative adjustments.

Gradient Descent and the Error Surface

The gradient descent algorithm operates like a hiker descending valleys in foggy conditions. It calculates directional steps using slope information from the error surface – a geometric representation of prediction inaccuracies across parameter combinations. Each iteration adjusts weights to minimise discrepancies between actual and expected outputs.
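In symbols, each gradient descent step moves the weights w a small distance against the gradient of the loss, scaled by the learning rate η. The mean-squared-error loss shown for the error surface is an assumption for concreteness; the text does not commit to a particular loss function.

```latex
w_{t+1} = w_t - \eta \, \nabla_{w} L(w_t),
\qquad
L(w) = \frac{1}{N} \sum_{i=1}^{N} \bigl( f(x_i; w) - y_i \bigr)^2
```

Here f(x_i; w) is the network's prediction for input x_i, y_i is the target, and N is the number of training examples; the hiker's "slope information" is the gradient of L with respect to w.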

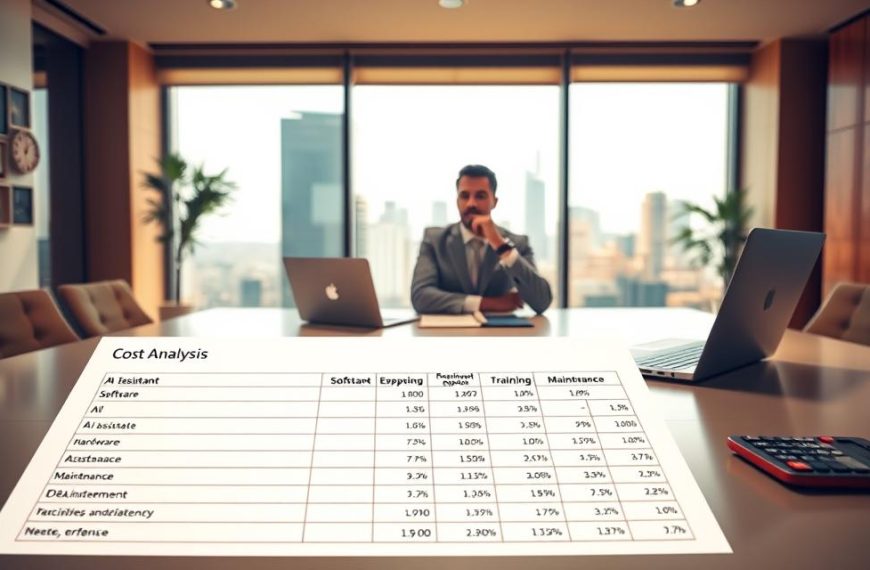

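| Method | Convergence Behaviour | Memory Usage |
|---|---|---|
| Stochastic GD | Frequent, cheap updates; noisy loss curve | Low |
| Mini-batch GD | Balances update noise against cost per step | Moderate |
| Adam Optimiser | Adaptive per-parameter step sizes | Higher (stores two running averages per parameter) |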
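The first two rows of the table differ only in how many examples feed each update. A minimal sketch, assuming a noiseless toy line y = 2x + 1 and a squared-error loss: `batch_size=1` gives stochastic gradient descent and `batch_size=len(data)` gives full-batch descent. The learning rate and epoch count are illustrative choices, not recommendations.

```python
import random

def minibatch_sgd(data, batch_size, lr=0.3, epochs=1_000, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on mean squared error.

    batch_size=1 is stochastic GD; batch_size=len(data) is full-batch GD.
    """
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not modify the caller's list
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the batch-average squared error with respect to w and b.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical noiseless data on the line y = 2x + 1, with x from 0.0 to 1.0.
points = [(i / 20, 2 * (i / 20) + 1) for i in range(21)]
for size in (1, 5, len(points)):
    w, b = minibatch_sgd(points, batch_size=size)
    print(f"batch_size={size:2d}: w={w:.4f}, b={b:.4f}")
```

On this noiseless data all three settings recover w ≈ 2 and b ≈ 1. With noisy data, the batch-size-1 run would keep jittering around the solution unless its learning rate were reduced.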

Adaptive methods such as RMSprop and Adam scale each parameter's step size using running averages of past squared gradients; Adam additionally applies momentum, a running average of the gradients themselves. This damps the oscillations that plain gradient descent shows across narrow ravines in the error surface.
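A minimal, dependency-free sketch of the Adam update, following the published algorithm of Kingma and Ba with its usual default β values, applied to a toy ravine-shaped loss L(w) = 0.5(100·w0² + w1²) that is steep along one axis and shallow along the other. The loss, learning rate and step count are assumptions for illustration; production code would use a framework optimiser such as `torch.optim.Adam`.

```python
import math

def adam(grad_fn, w0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=2_000):
    """Minimal Adam optimiser (Kingma & Ba) over a list of scalar parameters."""
    w = list(w0)
    m = [0.0] * len(w)  # running mean of gradients (momentum term)
    v = [0.0] * len(w)  # running mean of squared gradients (per-parameter scale)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1 - beta1) * gi
            v[i] = beta2 * v[i] + (1 - beta2) * gi * gi
            # Bias correction: m and v start at zero, so early averages are too small.
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            w[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

def ravine_grad(w):
    # Gradient of the toy loss 0.5 * (100 * w0**2 + w1**2): steep in w0, shallow in w1.
    return [100 * w[0], w[1]]

w_final = adam(ravine_grad, [1.0, 1.0])
print(w_final)
```

Because Adam divides each step by a running root-mean-square of that parameter's own gradients, the steep and shallow coordinates move at similar rates instead of the steep one oscillating.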

Hyperparameter Optimisation

Selecting optimal configurations requires balancing exploration and exploitation. Key parameters include:

- Learning rate magnitude

- Batch size

- Regularisation strength

“Tuning hyperparameters transforms theoretical models into production-ready solutions” – Machine Learning Researcher

Automated systems now employ Bayesian optimisation to navigate this complex search space efficiently. These tools fit a probabilistic model to the results of earlier trials and use it to choose the most promising configuration to try next, which typically needs far fewer trials than exhaustive grid search.
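Bayesian optimisation needs a surrogate model and is best left to a dedicated library. The sketch below swaps in the simpler random search baseline, sampling learning rates on a log scale. The objective `final_loss` is a hypothetical stand-in (gradient descent on L(w) = w²) for training and validating a real model.

```python
import random

def final_loss(lr, steps=50):
    """Hypothetical objective: loss after `steps` of gradient descent on L(w) = w**2 from w = 1."""
    w = 1.0
    for _ in range(steps):
        w -= lr * 2 * w  # gradient of w**2 is 2w
    return w * w

def random_search(objective, n_trials=30, low_exp=-4.0, high_exp=0.0, seed=0):
    """Random search: sample learning rates log-uniformly in [10**low_exp, 10**high_exp]."""
    rng = random.Random(seed)
    best_lr, best_loss = None, float("inf")
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(low_exp, high_exp)
        loss = objective(lr)
        if loss < best_loss:
            best_lr, best_loss = lr, loss
    return best_lr, best_loss

best_lr, best_loss = random_search(final_loss)
print(f"best learning rate {best_lr:.4f} with final loss {best_loss:.2e}")
```

Sampling on a log scale spends trials evenly across orders of magnitude, which matters because useful learning rates can differ by factors of ten or more between models.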

Convergence in Neural Networks and the Perceptron Theorem

Frank Rosenblatt introduced the perceptron in 1957, and the perceptron convergence theorem, proved in the early 1960s by Rosenblatt and others, established mathematical guarantees for this pattern recognition system. The theorem remains fundamental to understanding how simple neural networks achieve stable solutions through iterative adjustments.

Overview of the Perceptron Convergence Theorem

The theorem guarantees success whenever the data are linearly separable. If some hyperplane puts every example of one class on one side and every example of the other class on the other side, the perceptron algorithm reaches perfect classification of the training set after a finite number of weight updates. Three features of the setting underpin this guarantee:

- The training data must be linearly separable

- Weights change only when an example is misclassified (mistake-driven updates)

- A fixed positive learning rate suffices; no decaying schedule is needed
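A minimal sketch of the mistake-driven update, assuming ±1 labels, a learned bias, and the two-input logical AND and XOR functions as toy data. For reference, the classical bound states that if every input (with its constant bias input appended) has length at most R and some unit-length weight vector separates the classes with margin γ, the perceptron makes at most (R/γ)² updates.

```python
def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Mistake-driven perceptron; labels are +1 / -1 and w[0] is the bias weight.

    Returns (weights, epochs_used). Training stops after the first epoch with
    no misclassifications, or after max_epochs if that never happens.
    """
    n_features = len(samples[0][0])
    w = [0.0] * (n_features + 1)
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for x, y in samples:
            xb = [1.0] + list(x)  # prepend a constant input for the bias term
            activation = sum(wi * xi for wi, xi in zip(w, xb))
            if y * activation <= 0:  # misclassified (or on the boundary): update
                w = [wi + lr * y * xi for wi, xi in zip(w, xb)]
                mistakes += 1
        if mistakes == 0:
            return w, epoch
    return w, max_epochs

def predict(w, x):
    return 1 if w[0] + sum(wi * xi for wi, xi in zip(w[1:], x)) > 0 else -1

and_data = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
xor_data = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]

w_and, epochs_and = train_perceptron(and_data)
w_xor, epochs_xor = train_perceptron(xor_data)
print(epochs_and, [predict(w_and, x) for x, _ in and_data])  # 9 [-1, -1, -1, 1]
print(epochs_xor)  # 100: no error-free epoch exists for XOR
```

On AND the loop ends with an error-free epoch; on XOR, which no single line can separate, it exhausts `max_epochs` without one, which is exactly the case the theorem does not cover.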

“Rosenblatt’s work demonstrated that machines could learn decision boundaries without explicit programming”

Implementations in Neural Network Training

Modern architectures build upon these principles through layered adaptations. While single-layer perceptrons handle basic classification, multi-layer systems address non-linear patterns through backpropagation. Key implementations include:

| Architecture | Data | Convergence Check |
|---|---|---|
| Single-layer | Linearly separable | Error-free epochs |
| Multi-layer | Not linearly separable | Gradient stabilisation |

Practical applications range from spam filters to medical diagnostics. Developers often train perceptron-style linear classifiers with stochastic gradient descent for efficiency. These approaches keep the simple, online updates of the original algorithm, although the perceptron's convergence guarantee holds only when the data are linearly separable.

Challenges in Achieving Convergence

Developing robust neural architectures faces multiple roadblocks during training cycles. High-dimensional datasets with overlapping patterns often create complex optimisation landscapes where algorithms struggle to find stable solutions. These obstacles demand careful balancing of computational resources and model capabilities.

Navigating Data Complexity and Model Pitfalls

Noisy observations frequently distort parameter adjustments, causing erratic training behaviour. Consider smartphone sensor data collected in urban environments – inconsistent readings may lead models towards misleading patterns. Three critical issues emerge:

| Challenge | Impact | Solution |
|---|---|---|
| High-dimensional data | Slows parameter tuning | Feature selection |
| Imbalanced classes | Skews decision boundaries | Resampling techniques |
| Insufficient samples | Limits pattern recognition | Data augmentation |
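Of the remedies in the table, resampling is the easiest to show in a few lines. A minimal sketch of random oversampling, one common resampling technique, which duplicates randomly chosen minority-class examples until every class is as frequent as the largest; the 95-to-5 dataset is made up for illustration. Undersampling the majority class or synthesising new minority examples are alternatives not shown here.

```python
import random
from collections import Counter

def random_oversample(samples, seed=0):
    """Duplicate random minority-class examples until all classes are equally common.

    `samples` is a list of (features, label) pairs.
    """
    rng = random.Random(seed)
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append((features, label))
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw extra copies with replacement from this class's own examples.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    rng.shuffle(balanced)
    return balanced

# Hypothetical imbalanced sensor dataset: 95 "normal" readings, 5 "fault" readings.
data = [((i,), "normal") for i in range(95)] + [((i,), "fault") for i in range(5)]
balanced_data = random_oversample(data)
print(Counter(label for _, label in balanced_data))
```

Oversampling should be applied to the training split only; duplicating examples before splitting would leak copies into the validation set.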

Overfitting remains a persistent threat, particularly with limited training examples. A model might, for example, reach 95% accuracy on the data it was trained on while performing poorly on new inputs. Regularisation methods such as dropout layers help preserve generalisation, typically at a modest cost in training-set accuracy.
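A minimal sketch of inverted dropout, the variant commonly used in modern frameworks, applied to a plain list of activations. The function name and signature are hypothetical; in practice a framework layer such as `torch.nn.Dropout` does this inside the network.

```python
import random

def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout on a list of activations.

    During training each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so the expected value is unchanged.
    At inference time (training=False) the input passes through untouched.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
sample = dropout([1.0] * 10, p=0.5, rng=rng)
print(sample)  # each entry is either 0.0 or 2.0
```

Scaling the survivors during training means no rescaling is needed at inference time, so the deployed network runs the same code path with dropout simply switched off.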

“The true test of convergence lies in a model’s ability to handle edge cases, not just textbook examples” – AI Research Lead, Cambridge University

Diagnostic tools prove essential for identifying unstable training processes. Practitioners monitor:

- Divergence between training/validation loss curves

- Sudden spikes in gradient magnitudes

- Plateauing accuracy metrics across epochs
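The three warning signs above can be checked with a few comparisons on per-epoch logs. A minimal sketch; the function name, its arguments and every threshold are illustrative assumptions rather than recommended values.

```python
import statistics

def diagnose(train_losses, val_losses, grad_norms, accuracies,
             gap_ratio=1.5, spike_factor=10.0, plateau_tol=0.002, window=3):
    """Return warning labels for divergence, gradient spikes and accuracy plateaus."""
    warnings = []
    # Divergence: latest validation loss well above the latest training loss.
    if val_losses[-1] > gap_ratio * train_losses[-1]:
        warnings.append("train/validation divergence")
    # Spike: latest gradient norm far above the median of earlier epochs.
    if len(grad_norms) > 1 and grad_norms[-1] > spike_factor * statistics.median(grad_norms[:-1]):
        warnings.append("gradient spike")
    # Plateau: accuracy moved less than plateau_tol over the last `window` epochs.
    if len(accuracies) >= window:
        recent = accuracies[-window:]
        if max(recent) - min(recent) < plateau_tol:
            warnings.append("accuracy plateau")
    return warnings

print(diagnose(train_losses=[1.0, 0.6, 0.4, 0.3, 0.2],
               val_losses=[1.0, 0.65, 0.5, 0.5, 0.55],
               grad_norms=[2.0, 1.5, 1.2, 1.0, 25.0],
               accuracies=[0.60, 0.75, 0.801, 0.802, 0.801]))
```

For these made-up logs all three warnings fire; a healthy run returns an empty list.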

Adaptive learning schedulers and early stopping protocols help mitigate these issues. By dynamically adjusting training parameters, systems can navigate complex datasets more effectively while conserving computational resources.
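One common adaptive scheduler lowers the learning rate when validation loss stops improving. A standalone sketch in the spirit of PyTorch's `ReduceLROnPlateau` scheduler, borrowing its `factor`, `patience` and `min_lr` argument names; it is not that class's implementation.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` after more than `patience` epochs without improvement."""

    def __init__(self, lr, factor=0.5, patience=2, min_lr=1e-6):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss and return the learning rate to use next."""
        if val_loss < self.best:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0  # start counting again at the new rate
        return self.lr

sched = ReduceOnPlateau(0.1, factor=0.5, patience=2)
lrs = [sched.step(loss) for loss in [1.0, 0.8, 0.8, 0.8, 0.8, 0.7]]
print(lrs)
```

With `patience=2`, the rate is halved on the third consecutive epoch without improvement, and the counter then resets.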

Role of Learning Rate and Optimisation Algorithms

Training efficiency in neural systems hinges on precise calibration of key parameters. The relationship between learning rates and optimisation methods determines whether models reach stable solutions or diverge.

Impact of Learning Rate Adjustments

An excessively high learning rate causes unstable weight updates: the model may overshoot optimal parameters, leading to erratic or diverging loss. Conversely, an overly small rate prolongs training unnecessarily. Adaptive approaches adjust step sizes dynamically across epochs, balancing speed with stability.
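The effect can be seen exactly on the toy loss L(w) = w², assumed here for illustration, whose gradient is 2w. Each gradient descent step multiplies w by (1 - 2·lr), so the iterates shrink steadily for 0 < lr < 0.5, flip sign but still shrink for 0.5 < lr < 1, and grow without bound for lr > 1.

```python
def run_gd(lr, steps=20, w0=1.0):
    """Gradient descent on L(w) = w**2 (gradient 2w); returns the loss after each step."""
    w, losses = w0, []
    for _ in range(steps):
        w -= lr * 2 * w  # equivalent to w *= (1 - 2 * lr)
        losses.append(w * w)
    return losses

for lr in (0.01, 0.4, 0.9, 1.1):
    losses = run_gd(lr)
    print(f"lr={lr:<4}: final loss {losses[-1]:.3e}")
```

After 20 steps, lr=0.01 is still far from the minimum, lr=0.4 has essentially converged, lr=0.9 converges while overshooting back and forth, and lr=1.1 diverges.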

Three critical factors govern rate selection:

- Network depth and layer complexity

- Batch size variations during training

- Activation function characteristics

“Selecting appropriate learning rates remains one of the most impactful decisions in model development” – Machine Learning Engineer, Imperial College London

Modern optimisation algorithms address these challenges through sophisticated mechanisms. Adam combines momentum tracking with per-parameter scaling, while RMSprop uses moving averages of squared gradients. These methods automatically adapt to error surface topography, often achieving faster stabilisation than basic gradient descent.

Practical implementations employ hybrid strategies. Initial phases might use aggressive rates for rapid progress, switching to conservative adjustments near potential minima. Automated hyperparameter tuning frameworks now integrate these principles, systematically exploring configurations that balance speed with reliability.
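The "aggressive first, conservative later" pattern is often expressed as a learning-rate schedule that depends only on the epoch number, used as a stand-in for knowing when the model is near a minimum. Two common shapes, step decay and cosine annealing, are sketched below as plain functions with assumed default values; PyTorch provides schedulers built on the same ideas (`StepLR`, `CosineAnnealingLR`), but these functions are standalone illustrations.

```python
import math

def step_decay(initial_lr, epoch, drop=0.1, epochs_per_drop=30):
    """Multiply the learning rate by `drop` once every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)

def cosine_annealing(epoch, total_epochs, max_lr=0.1, min_lr=0.001):
    """Decay smoothly from max_lr at epoch 0 to min_lr at total_epochs along a half cosine."""
    progress = min(epoch, total_epochs) / total_epochs
    return min_lr + 0.5 * (max_lr - min_lr) * (1 + math.cos(math.pi * progress))

for epoch in (0, 29, 30, 45, 60, 90):
    print(epoch, step_decay(0.1, epoch), round(cosine_annealing(epoch, 90), 5))
```

Step decay makes abrupt cuts at fixed milestones, while cosine annealing lowers the rate gradually; both keep early steps large and late steps small.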

Practical Examples and Real-World Use Cases

Real-world implementations demonstrate how stabilised training processes enable advanced AI functionalities. From urban infrastructure to transport systems, these applications rely on precise pattern recognition honed through iterative refinement.

Image Recognition and Autonomous Vehicles

Visual processing systems achieve operational readiness when training metrics plateau reliably. Self-driving cars use stabilised models to distinguish pedestrians from street furniture across varied lighting conditions. Engineers validate these systems through millions of input data points, ensuring consistent performance before deployment.

Convolutional architectures power traffic sign recognition with 99% accuracy in controlled tests. This reliability stems from rigorous checks confirming parameter stability across diverse scenarios. Autonomous navigation depends on such validated models to make split-second decisions.

Smart Cities and IoT Applications

Municipal AI systems process real-time sensor streams to optimise energy usage and traffic flow. Stabilised learning ensures predictions remain accurate despite fluctuating input data from weather patterns or rush-hour congestion. Bristol’s smart grid project reduced peak demand by 15% using these principles.

IoT networks use converged models for predictive maintenance in transport networks. Readings from vibration sensors on bridges, for example, are analysed by neural networks trained to detect structural anomalies. This approach helps prevent failures and extend infrastructure lifespan through timely interventions.

FAQ

How does convergence relate to neural network training?

During neural network training, convergence refers to the point at which iterative adjustments to weights and biases stop reducing the error function meaningfully. The model's predictions then fit the training data stably; convergence alone does not rule out overfitting, so validation performance must be checked as well.

Why is learning rate critical for optimisation algorithms?

The learning rate controls step sizes during gradient descent. A well-chosen rate allows efficient traversal of the error surface: too high risks overshooting optimal points, while too low prolongs training. Frameworks like TensorFlow or PyTorch offer adaptive optimisers and learning-rate schedulers.

What challenges arise when handling noisy data in machine learning?

Noisy data complicates convergence by making gradient estimates less reliable and by encouraging models to fit spurious patterns. Techniques like dropout layers or data augmentation improve generalisation, helping models avoid memorising irrelevant patterns in the training set.

How do hyperparameters influence model performance?

Hyperparameters, such as batch size or activation functions, dictate how algorithms navigate the solution space. Poor choices lead to divergence or slow convergence. Tools like Google’s Vertex AI automate tuning for balanced speed and accuracy.

Can convergence guarantees apply to real-world AI applications?

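Partly. Formal convergence guarantees, such as the perceptron theorem, cover simple settings like linearly separable data or convex objectives. The deep convolutional networks that autonomous vehicles use for object detection lack such guarantees, so practitioners rely on monitoring loss and validation curves instead. Similarly, IoT systems in smart cities rely on recurrent networks that balance training efficiency with real-time data processing demands.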

What role does the perceptron theorem play in modern AI?

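The perceptron convergence theorem guarantees that, if the training data are linearly separable, the perceptron finds a separating hyperplane after a finite number of updates. Linear classifiers such as SVMs and logistic regression build on the same idea of a linear decision boundary; because their training objectives are convex, stochastic gradient descent with a suitably decreasing learning rate can reliably approach the optimal boundary.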