Modern computational linguistics has undergone radical changes since the rise of neural network architectures. These systems now interpret human communication with remarkable precision, raising questions about how natural language processing aligns with broader technological frameworks.

Traditional approaches relied on rigid rules and manual feature engineering. Today, multi-layered neural networks learn textual patterns directly from data, in a way loosely inspired by how biological neurons connect. This shift stems from advancements in machine learning subfields that prioritise data-driven pattern recognition.

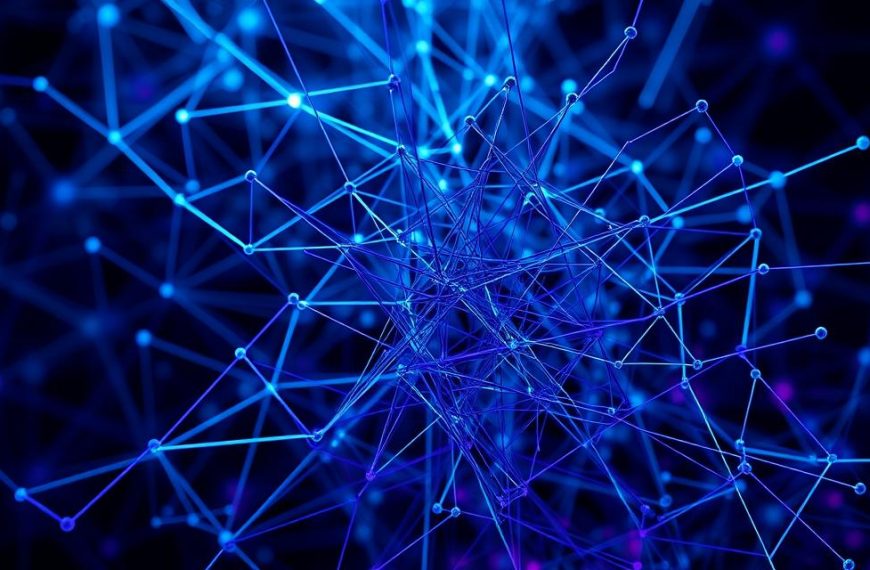

Central to this evolution are artificial neural networks – digital structures that process information through interconnected nodes. Their ability to self-optimise makes them particularly effective for linguistic tasks, from sentiment analysis to real-time translation systems.

Contemporary methods employ diverse architectures like convolutional networks and transformer models. These tools learn semantic relationships and contextual nuances from data rather than from hand-written rules, fundamentally altering how machines engage with language.

The interplay between language technologies and advanced algorithms defies simple categorisation. Subsequent sections will dissect whether this connection represents hierarchical inclusion or symbiotic collaboration.

Introduction to NLP and Deep Learning

The fusion of linguistic analysis and advanced algorithms drives today’s most sophisticated artificial intelligence systems. Natural language processing combines computational principles with statistical techniques, allowing machines to interpret text and speech effectively. Early methods depended on manually crafted rules, but modern implementations leverage self-improving neural architectures.

Traditional approaches required extensive human input to define grammatical structures. Current frameworks utilise multi-layered networks and machine learning techniques that autonomously identify patterns across massive datasets. This shift has enabled breakthroughs like generative AI models capable of producing human-like responses.

Practical applications demonstrate this synergy clearly. Virtual assistants process voice commands using recurrent neural networks, while translation services employ transformer models to capture contextual nuances. These innovations highlight how deep learning methodologies underpin contemporary language technologies.

Overview of Natural Language Processing

Machines deciphering human speech represents one of artificial intelligence’s most complex challenges. This field merges computational linguistics with machine learning algorithms, enabling systems to parse syntax and interpret context. At its core, it transforms unstructured data into actionable insights through layered analytical processes.

Definition and Key Concepts

Language analysis rests on two pillars: syntactic and semantic analysis. The former dissects sentence structure using grammatical rules, while the latter extracts meaning in context. Both build on lower-level steps: tokenisation breaks text into manageable units such as words and punctuation marks, while named entity recognition identifies names of people, locations or organisations.
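As a minimal sketch of these two steps, the snippet below tokenises a sentence with a regular expression and then flags runs of capitalised tokens as candidate entities. The function names `tokenise` and `candidate_entities` are illustrative, and the capitalisation heuristic is only a stand-in for a trained named entity recogniser, which would also assign types such as location or organisation.

```python
import re

def tokenise(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def candidate_entities(tokens):
    """Group consecutive capitalised tokens into candidate entity names.

    Assumption: capitalisation marks a proper noun. A lone capitalised
    word at the very start of the text is ignored, since it is usually
    just sentence-initial capitalisation.
    """
    entities, run, run_start = [], [], 0
    for i, tok in enumerate(tokens + [""]):  # empty sentinel flushes the last run
        if tok[:1].isupper():
            if not run:
                run_start = i
            run.append(tok)
        else:
            if run and not (run_start == 0 and len(run) == 1):
                entities.append(" ".join(run))
            run = []
    return entities

tokens = tokenise("The firm opened an office in New York with Acme Corp.")
print(tokens)
print(candidate_entities(tokens))
```

The heuristic fails on lower-case names and on sentence-initial entities, which is one reason production systems learn these decisions from annotated data instead.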

Advanced systems employ sentiment analysis to gauge emotional tones in communications. As noted by researchers, “The true power lies in combining these techniques to mirror human comprehension.” Such integration allows chatbots to deliver coherent responses or translation tools to preserve idiomatic nuances.

Applications in Industry

From healthcare to finance, automated language processing techniques streamline operations. Medical teams use it to analyse patient records, identifying trends in symptoms. Legal firms deploy contract-review algorithms that highlight critical clauses in seconds.

Customer service sectors benefit through AI-driven chatbots resolving queries 24/7. Financial institutions automate document processing, reducing manual errors in loan approvals. These implementations showcase how natural language technologies enhance efficiency across diverse sectors.

The Evolution of Deep Learning for NLP

Language technology has transformed dramatically across three distinct phases. Early systems depended on rigid rule-based frameworks, where programmers manually coded grammatical patterns. These if-then structures struggled with ambiguity, often requiring constant updates to handle exceptions.

Statistical methods emerged next, introducing probabilistic approaches to language analysis. Algorithms began mapping words to numerical vectors, enabling spellcheckers and predictive text. This shift allowed machine learning techniques to identify patterns in documents without exhaustive human input.
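One simple probabilistic approach of this kind is a bigram model, which predicts the next word from counts of adjacent word pairs. The sketch below is illustrative only: the three-sentence corpus and the helper names `train_bigrams` and `predict_next` are assumptions, not taken from any particular system.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word` and its estimated probability."""
    followers = counts.get(word.lower())
    if not followers:
        return None  # word never seen with a follower in the training data
    total = sum(followers.values())
    best, freq = followers.most_common(1)[0]
    return best, freq / total

# Hypothetical toy corpus; real predictive text is trained on far more data.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "sat"))
```

No grammar rules are written by hand here; the model's behaviour comes entirely from counting patterns in the example sentences.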

The current era revolves around multi-layered neural architectures. Key innovations include:

- Word embeddings capturing semantic relationships

- Recurrent networks processing sequential data

- Transformer models prioritising contextual relevance

These advancements largely removed the need for manual feature engineering. Modern systems ingest raw text and voice data and learn useful representations through training on billions of examples. As one Google engineer noted,

“The ability to learn from unstructured information fundamentally changed what machines understand about human communication.”

Breakthrough architectures like BERT and GPT show how far deep learning has pushed accuracy on language tasks. They handle many idioms, instances of sarcasm, and cultural references far better than earlier systems, tasks once deemed impossible for artificial systems, although they still make mistakes. This progression underscores the symbiotic relationship between linguistic research and computational innovation.

Understanding Deep Learning Models and Architectures

Neural architectures powering language technologies rely on specialised designs for optimal performance. Two long-established frameworks – convolutional and recurrent networks – demonstrate distinct approaches to handling textual information. Their structural differences dictate how systems analyse patterns in documents or conversations.

Convolutional Neural Networks (CNNs) in NLP

Originally crafted for visual recognition, these neural networks now excel at detecting localised patterns in text. Convolutional layers scan documents using filters that identify n-gram features – word combinations revealing sentiment or topic. Max-pooling operations then highlight dominant linguistic characteristics across sentences.

This architecture proves particularly effective for:

- Processing all positions in a text in parallel

- Classifying emails or social media posts

- Identifying key phrases in legal contracts

Recurrent Neural Networks (RNNs) for Sequence Data

Designed for temporal dependencies, RNNs process words sequentially while maintaining a hidden state. This state acts as a memory that tracks contextual relationships between terms, enabling coherent sentence generation. Long Short-Term Memory (LSTM) variants mitigate the vanishing-gradient problem through gating mechanisms that control information flow.
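The hidden-state update can be sketched in a few lines. The example below assumes a plain Elman-style recurrence, h_t = tanh(W_x·x_t + W_h·h_{t-1} + b), with small hand-picked weights; the names `rnn_step` and `run_rnn` and all numeric values are illustrative, and the LSTM gates mentioned above are deliberately left out.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One recurrent update: combine the current input with the previous state."""
    h_new = []
    for i in range(len(h_prev)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, W_x, W_h, b):
    """Process a sequence step by step, returning the hidden state after each step."""
    h = [0.0] * len(b)  # initial state: no context yet
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states

# Hypothetical toy weights: 2-dimensional inputs, 2-dimensional hidden state.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [-0.4, 0.3]]
b = [0.0, 0.1]

# The first and third inputs are identical, but their hidden states differ
# because the third step also sees context carried over from earlier steps.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
states = run_rnn(sequence, W_x, W_h, b)
for step, state in enumerate(states):
    print(step, [round(v, 3) for v in state])
```

Because each step depends on the previous one, the loop in `run_rnn` cannot be parallelised across time steps, which is the speed limitation the comparison below refers to.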

| Architecture | Strengths | Common Uses |
|---|---|---|
| CNNs | Rapid feature extraction | Text categorisation |
| RNNs | Context preservation | Speech recognition |
| LSTMs | Long-range dependencies | Machine translation |

As one MIT researcher observes:

“Choosing between these frameworks depends on whether your priority lies in speed or contextual depth.”

Hybrid approaches often combine both architectures to balance efficiency with nuanced analysis.

Is NLP a Part of Deep Learning?

Artificial intelligence frameworks resemble interconnected ecosystems rather than rigid hierarchies. Natural language processing is not a subset of deep learning: it is a specialised discipline within the broader AI landscape, defined by its subject matter (human language), whereas deep learning is a family of methods that it draws on heavily. This relationship becomes clearer when examining how linguistic systems combine multiple methodologies.

Contemporary language models blend statistical approaches with neural networks. Traditional techniques like syntax parsing coexist with transformer-based architectures, creating hybrid solutions. As a Stanford researcher notes:

“Effective communication analysis requires both linguistic rules and adaptive pattern recognition.”

Three key points clarify their connection:

- Neural networks enhance contextual understanding in translation tools

- Rule-based systems maintain grammatical accuracy in chatbots

- Statistical methods optimise sentiment analysis algorithms

These collaborations demonstrate how deep learning supplements rather than replaces established practices. Language technologies employ convolutional networks for text classification while retaining symbolic logic for semantic disambiguation.

The integration extends beyond technical implementation. Linguistic expertise guides neural architecture design, ensuring models account for idioms and cultural references. Meanwhile, self-improving algorithms handle data processing at scales impossible for manual coding.

This synergy explains why machine learning serves as a bridge between disciplines. It enables systems to learn from historical datasets while preserving domain-specific knowledge. The result? Advanced voice assistants that comprehend regional accents and legal document analysers that spot contractual nuances.

Decoding the Role of RNNs in Sequence-to-Sequence Tasks

Processing sequential information demands architectures that remember past inputs while predicting future outputs. Recurrent neural networks excel here through their cyclical connections, maintaining memory across time steps. This capability proves vital for converting one sequence into another – like transforming French sentences into English equivalents.

Machine Translation and Text Summarisation Explained

Translators convert entire phrases rather than individual words, requiring systems to grasp contextual relationships. Machine translation frameworks analyse source language structures before generating grammatically correct target outputs. For example, converting “Bonjour le monde” to “Hello world” involves understanding both vocabulary and syntax: the French article “le” has no counterpart in the English output, so a word-for-word mapping would fail.

Text condensation follows similar principles. Summarisation models identify key points in lengthy documents through pattern recognition. They then reproduce concise versions without losing critical information – like distilling a 1,000-word report into 100-word highlights.

Encoder-Decoder Architectures in RNNs

These dual-network systems process variable-length data through two phases. Encoders compress input sequences into fixed-dimensional context vectors, capturing semantic essence. Decoders then unpack these vectors step-by-step, generating coherent outputs.

Key advantages include:

- Handling mismatched input-output lengths

- Preserving meaning across translations

- Adapting to different text formats

As noted by a Cambridge researcher:

“The true innovation lies in bridging comprehension with generation through mathematical representations.”

Modern implementations use attention mechanisms to refine outputs. These components let decoders focus on relevant input sections during each generation step, improving accuracy in complex sequence tasks.

A Detailed Look at Transformer Models for NLP

Revolutionising how machines process language, transformer models bypass sequential limitations through parallel computation. Unlike recurrent architectures, these frameworks analyse entire sentences simultaneously. This approach accelerates training while capturing nuanced relationships between distant words.

Self-Attention Mechanism and Multi-Headed Attention

The self-attention process calculates relevance scores between every pair of words in a sentence. Each word's new representation is a weighted combination of all the words, with the weights reflecting those scores. For example, in “The bank charges interest rates,” the system recognises “bank” relates more to “interest” than “charges” when discussing finance.
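The relevance calculation can be illustrated with scaled dot-product attention. This is a simplified sketch: it uses the word vectors directly as queries, keys and values, whereas a real transformer first applies learned projection matrices to each. The two-dimensional vectors for “bank”, “charges” and “interest” are made-up values chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs.

    Assumption: the learned query, key and value projections are omitted.
    """
    d = len(vectors[0])
    weights, outputs = [], []
    for q in vectors:
        scores = [dot(q, k) / math.sqrt(d) for k in vectors]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all input vectors.
        outputs.append(
            [sum(w[j] * vectors[j][i] for j in range(len(vectors))) for i in range(d)]
        )
    return weights, outputs

# Hypothetical embeddings, not taken from any trained model.
words = ["bank", "charges", "interest"]
vectors = [[1.0, 0.2], [0.1, 1.0], [0.9, 0.3]]

weights, outputs = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(v, 3) for v in row])
```

With these toy vectors, the row for “bank” assigns more weight to “interest” than to “charges”, and every row is computed independently of the others, which is what allows the whole sentence to be processed in parallel.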

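Multi-headed attention expands this capability by running several attention computations in parallel. The original Transformer used eight heads, and larger models use more. The heads are not assigned roles in advance, but each can learn to focus on different linguistic aspects, such as: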

- Syntactic roles (nouns vs verbs)

- Semantic connections (synonyms)

- Contextual dependencies (idioms)

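| Feature | Transformers | RNNs |
|---|---|---|
| Processing | Parallel across tokens | Sequential, one token at a time |
| Training speed | Generally faster on parallel hardware | Slower, since time steps cannot be parallelised |
| Context window | Fixed maximum length (e.g. 512 tokens in BERT) | Unbounded in principle, but long-range context fades in practice |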

As a Google DeepMind researcher observes:

“Self-attention mirrors how humans prioritise information – weighing relevance rather than processing order.”

These architectures enable language models to handle massive datasets efficiently. Their scalability supports training models with billions of parameters, achieving state-of-the-art accuracy in translation and text generation tasks.

Practical Implementations of CNNs for NLP Tasks

Pattern recognition through convolutional frameworks reshapes how systems handle textual data. These architectures excel in text classification, particularly for analysing customer reviews or social media content. Their layered approach processes linguistic patterns similarly to image recognition – detecting localised features within sentences.

For sentiment analysis, a typical CNN structure begins with embedding layers converting words into numerical vectors. Convolutional filters then scan these representations, identifying phrases indicating positive or negative tones. Max-pooling layers highlight dominant emotional markers before fully connected layers make final predictions.
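The pipeline described above (embedding, convolution, max-pooling, final prediction) can be sketched as a forward pass in plain Python. Everything here is a toy assumption: the two-dimensional `EMBEDDINGS`, the single hand-set filter `KERNEL`, and the output weights are chosen by hand for illustration, whereas a real model learns many filters and all weights from labelled data.

```python
import math

# Hypothetical 2-dimensional embeddings; a real model would learn these.
EMBEDDINGS = {
    "very": [0.0, 1.0],
    "good": [1.0, 0.0],
    "bad": [-1.0, 0.0],
    "film": [0.0, 0.0],
}

def embed(tokens):
    """Embedding layer: look up a vector per token (zeros for unknown words)."""
    return [EMBEDDINGS.get(t, [0.0, 0.0]) for t in tokens]

def conv1d(vectors, kernel, bias=0.0):
    """Slide one filter over every window of len(kernel) consecutive word vectors."""
    width = len(kernel)
    features = []
    for start in range(len(vectors) - width + 1):
        window = vectors[start:start + width]
        total = bias + sum(
            w * x
            for k_row, v_row in zip(kernel, window)
            for w, x in zip(k_row, v_row)
        )
        features.append(max(0.0, total))  # ReLU activation
    return features

def classify(tokens, kernel, out_weight, out_bias):
    """Return a score between 0 and 1, read here as probability of positive tone."""
    features = conv1d(embed(tokens), kernel)
    pooled = max(features, default=0.0)    # max-pooling over all positions
    logit = out_weight * pooled + out_bias  # single-unit fully connected layer
    return 1 / (1 + math.exp(-logit))       # sigmoid

# A width-2 filter that responds to an intensifier followed by a positive word.
KERNEL = [[0.0, 1.0], [1.0, 0.0]]

print(classify(["film", "very", "good"], KERNEL, 2.0, -2.0))
print(classify(["film", "very", "bad"], KERNEL, 2.0, -2.0))
```

Max-pooling keeps only the strongest filter response, so the phrase is detected wherever it occurs in the sentence; this position-independence is what makes the design suit short reviews and posts.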

Spam detection systems employ similar principles. Networks classify emails by recognising suspicious word combinations and structural anomalies. When retrained regularly on new examples, this method can keep pace with evolving phishing tactics better than static rule-based filters.

Key advantages include:

- Rapid processing of large document batches

- Effective handling of short-form content

- Adaptability across multiple languages

These implementations demonstrate how convolutional models address real-world challenges. From screening support tickets to moderating forums, they enhance automated decision-making while reducing manual oversight.

FAQ

How does natural language processing relate to deep learning?

Natural language processing (NLP) leverages deep learning models like recurrent neural networks and transformer architectures to analyse text data. While NLP existed before these frameworks, modern techniques rely heavily on neural networks for tasks like sentiment analysis or machine translation.

What makes recurrent neural networks suitable for language tasks?

Recurrent neural networks (RNNs) process sequential data, such as sentences, by retaining context over time. This makes them well suited to text classification, speech recognition, or generating contextual responses in chatbots. Architectures like LSTM or GRU address challenges like vanishing gradients.

Why are transformer models dominant in modern NLP?

Transformer models use self-attention mechanisms to weigh word relationships dynamically, outperforming older methods in tasks like text summarisation. Systems like BERT or GPT-4 excel because they capture long-range dependencies and context more efficiently than traditional RNNs.

Can convolutional neural networks handle text-based challenges?

Though primarily used for images, CNNs apply filters to detect local patterns in text data. They work well for sentence classification or identifying key phrases, especially when combined with word embeddings like GloVe or FastText.

How do word embeddings improve language modelling?

Word embeddings convert words into dense vectors, capturing semantic meaning (e.g., “king” – “man” + “woman” ≈ “queen”). Techniques like Word2Vec produce one static vector per word, while transformers learn contextual embeddings that vary with the surrounding sentence. Both help models interpret synonyms, analogies, and contextual nuances in text.
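The analogy arithmetic can be tried on a toy vocabulary. In the sketch below, the two-dimensional vectors are hand-made assumptions (one axis loosely standing for royalty, the other for gender) rather than output from Word2Vec or any trained model, and `analogy` is an illustrative helper name.

```python
import math

# Hypothetical hand-made vectors: [royalty, gender]. Trained embeddings
# have hundreds of dimensions and no such neatly labelled axes.
VECTORS = {
    "king": [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man": [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}

def cosine(a, b):
    """Cosine similarity: 1 for identical directions, -1 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def analogy(a, b, c):
    """Return the word whose vector is closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, VECTORS[w]))

print(analogy("king", "man", "woman"))
```

With real embeddings the result is only approximately the expected word, which is why the relation is written with ≈ rather than an equals sign.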

What industries benefit most from NLP applications?

Sectors like healthcare use sentiment analysis for patient feedback, while finance employs machine translation for global trading. E-commerce platforms apply text classification for reviews, and customer service uses chatbots powered by sequence-to-sequence models.

Are encoder-decoder architectures still relevant with transformers?

Yes. The encoder-decoder pattern predates transformers and survives within them: the original Transformer was itself an encoder-decoder model. The framework remains vital for tasks requiring input-output mapping, like document summarisation, and models like T5 or BART pair a transformer encoder with a transformer decoder for high-accuracy results.