Modern artificial intelligence systems rely on mathematical foundations to interpret complex information. At their core lie feature vectors – ordered collections of numerical values that transform real-world objects into formats digestible by algorithms. These structures serve as the universal language for statistical analysis and pattern recognition tasks.

Each vector represents an object through calculated measurements, with individual dimensions mapping to specific characteristics. Whether analysing pixels in an image or words in a document, this numerical representation enables machine learning models to identify trends and make predictions efficiently.

The simplicity of processing such data structures allows algorithms to perform tasks ranging from facial recognition to financial forecasting. By converting raw inputs into quantifiable arrays, systems can apply statistical methods similar to traditional techniques like linear regression.

Understanding these constructs is crucial for developing robust AI applications. They bridge the gap between abstract concepts and computational logic, forming the backbone of modern data-driven decision-making processes.

Introduction to Feature Vectors

Transforming diverse inputs into computational formats enables machines to recognise patterns. These structured numerical frameworks, known as feature vectors, quantify observable traits like human height or document word frequency. Each vector acts as a digital fingerprint, organising measurable attributes into consistent formats for algorithmic processing.

Definition and Relevance in AI

In practical terms, a feature vector represents an object through ordered numerical values. Consider health monitoring systems:

- Blood pressure (120/80 mmHg)

- Resting heart rate (72 bpm)

- Body mass index (23.7)

This standardised representation allows comparison across patients and populations. Modern systems process millions of such vectors daily, enabling real-time analysis in healthcare and finance.
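As a minimal sketch of the health monitoring example above, the readings can be packed into a fixed-order list of floats. The class and field names here (`PatientReading`, `systolic_mmhg` and so on) are illustrative assumptions, not part of any particular system. Note that a blood pressure of 120/80 contributes two dimensions, one per number.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PatientReading:
    """Hypothetical container for the three measurements listed above."""
    systolic_mmhg: float
    diastolic_mmhg: float
    resting_hr_bpm: float
    bmi: float

    def to_vector(self) -> List[float]:
        # The order is fixed so that the same index always means the same trait.
        return [
            float(self.systolic_mmhg),
            float(self.diastolic_mmhg),
            float(self.resting_hr_bpm),
            float(self.bmi),
        ]


reading = PatientReading(120, 80, 72, 23.7)
patient_vector = reading.to_vector()
print(patient_vector)  # [120.0, 80.0, 72.0, 23.7]
```

Keeping the order fixed is what makes vectors from different patients comparable position by position.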

Evolution in Machine Learning

Early statistical models used manual feature selection from structured data. Contemporary approaches automatically extract patterns from complex inputs:

| Era | Approach | Data Types | Complexity |
|---|---|---|---|
| 1950s-70s | Manual measurement | Structured tables | Low-dimensional |
| 2020s | AI-driven extraction | Multi-modal streams | High-dimensional |

This progression from simple spreadsheets to 4K video frames shows how vectors have scaled with technological advances. Deep learning architectures now learn many of their features directly from raw data, modelling relationships across hundreds or even millions of dimensions.

Understanding What Feature Vectors Are in Machine Learning

Digital systems quantify observable properties through structured numerical formats. These ordered sets transform colours, sounds, and physical measurements into analysable patterns. Let’s examine the RGB model – a foundational example where hues become triplets like [255, 0, 128] for algorithmic processing.
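One way to prepare the RGB triplet above for an algorithm is to rescale each 8-bit channel to the range 0 to 1. This is a sketch under the assumption that channels are integers from 0 to 255; the helper name `normalise_rgb` is made up for illustration.

```python
def normalise_rgb(colour):
    """Scale 8-bit RGB channels (0-255) to floats in [0, 1]."""
    if len(colour) != 3 or any(not 0 <= c <= 255 for c in colour):
        raise ValueError("expected three channel values between 0 and 255")
    return [c / 255 for c in colour]


triplet = [255, 0, 128]          # the example colour from the text
scaled_triplet = normalise_rgb(triplet)
print(scaled_triplet)            # red is 1.0, green is 0.0, blue is just over 0.5
```

Rescaling does not change which colour is described; it only puts all three channels on a common, bounded scale.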

Key Concepts and Numerical Representation

Practical implementations organise multiple feature vectors into design matrices. Consider measuring three individuals:

| Instance | Height (cm) | Weight (kg) |
|---|---|---|
| Person 1 | 175 | 68 |
| Person 2 | 182 | 75 |
| Person 3 | 168 | 62 |

Each row contains distinct numerical values representing one subject. Columns show specific traits across all entries. This structure enables efficient comparisons and mathematical operations.
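The table above can be written as a design matrix in a few lines. The sketch below uses plain nested lists rather than a numerical library, and the helper names (`column`, `column_means`) are assumptions chosen for readability.

```python
feature_names = ["height_cm", "weight_kg"]

# One row per person, one column per feature, in the same order as feature_names.
design_matrix = [
    [175.0, 68.0],  # Person 1
    [182.0, 75.0],  # Person 2
    [168.0, 62.0],  # Person 3
]


def column(matrix, j):
    """Return feature j across all instances."""
    return [row[j] for row in matrix]


def column_means(matrix):
    """Average each feature over all instances."""
    n = len(matrix)
    return [sum(col) / n for col in zip(*matrix)]


heights = column(design_matrix, 0)
means = column_means(design_matrix)
print(heights)  # [175.0, 182.0, 168.0]
print(means)    # mean height is 175.0; mean weight is 205 / 3
```

Reading along a row gives one person's feature vector; reading down a column gives one trait across the whole sample.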

Modern systems routinely handle high-dimensional vectors. A photograph’s pixel grid might contain millions of values, yet algorithms process these arrays in much the same way as a basic spreadsheet. Dimensionality directly affects model complexity: the more features there are, the more data and computation a model typically needs, and the more important techniques such as feature selection and dimensionality reduction become.

Quantification remains essential for pattern recognition. Unlike qualitative descriptions, numerical features provide measurable distances between data points. This precision allows accurate predictions in applications ranging from medical diagnostics to retail forecasting.
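To make "measurable distance" concrete, here is a small sketch that computes Euclidean distances between the three people from the earlier height and weight table. Standardising each column first is an assumption added here, not something the text prescribes; it is a common step when features use different units.

```python
import math
from statistics import mean, pstdev

people = [
    [175.0, 68.0],  # Person 1: height (cm), weight (kg)
    [182.0, 75.0],  # Person 2
    [168.0, 62.0],  # Person 3
]


def euclidean(a, b):
    """Straight-line distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def standardise(rows):
    """Rescale each column to zero mean and unit (population) standard deviation."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, mus, sds)] for row in rows]


d12 = euclidean(people[0], people[1])  # sqrt(7**2 + 7**2)
d13 = euclidean(people[0], people[2])  # sqrt(7**2 + 6**2)
scaled_people = standardise(people)
print(d12 > d13)  # True: Person 1 is closer to Person 3 than to Person 2
```

The raw distances mix centimetres and kilograms, which is why the standardised version is often the one passed to distance-based models.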

Applications and Functionalities in Pattern Recognition

Practical implementations of numerical representations power innovations across industries. These structured formats enable systems to detect meaningful relationships in visual, textual, and auditory data streams.

Image Processing and Object Recognition

Computer vision systems convert visual elements into measurable parameters. Edge detection algorithms might analyse gradient magnitudes, while colour intensity values help distinguish objects:

| Technique | Features Extracted | Common Use |
|---|---|---|
| Traditional | Grayscale values, basic shapes | Barcode scanning |
| Modern | Texture patterns, depth mapping | Autonomous vehicles |

This evolution allows facial recognition systems to achieve 99% accuracy in controlled environments. Neural networks process these numerical arrays to identify complex patterns beyond human perception.
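The gradient magnitudes mentioned above can be illustrated on a tiny grayscale grid. This is a simplified sketch using central differences on interior pixels only; real edge detectors typically use smoothing and larger kernels, and the 4x4 image here is invented for the example.

```python
import math


def gradient_magnitude(image):
    """Central-difference gradient magnitude; border pixels are left at 0.0."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = (image[y][x + 1] - image[y][x - 1]) / 2  # horizontal change
            gy = (image[y + 1][x] - image[y - 1][x]) / 2  # vertical change
            out[y][x] = math.hypot(gx, gy)
    return out


# A dark left half and a bright right half: one vertical edge down the middle.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]

magnitudes = gradient_magnitude(image)
edge_features = [v for row in magnitudes for v in row]  # flatten to one vector
print(magnitudes[1][1])  # 127.5
```

Flattening the grid row by row is the simplest way to turn a two-dimensional response map into a feature vector.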

Text Analysis and Spam Detection

Linguistic patterns become quantifiable through structured formats. Anti-spam systems evaluate multiple parameters:

- Word frequency distributions

- Header metadata patterns

- Geolocation discrepancies

One cybersecurity firm reports blocking 15 million malicious emails daily using these classification techniques. As noted by AI researcher Dr. Eleanor Whitmore:

“The real power lies in combining textual and metadata features – that’s where modern systems outpace traditional rule-based filters.”
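Combining textual and metadata features, as the quote describes, might look like the sketch below. The vocabulary, the `reply_to_differs` flag and the function name are all hypothetical, chosen only to show word frequencies and one header-derived value sharing a single vector.

```python
import re
from collections import Counter

# Hypothetical fixed vocabulary; a real filter would learn this from data.
VOCABULARY = ["free", "winner", "invoice", "meeting"]


def email_to_vector(subject, body, reply_to_differs):
    """Relative word frequencies for VOCABULARY plus one metadata flag."""
    tokens = re.findall(r"[a-z']+", (subject + " " + body).lower())
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid dividing by zero on empty messages
    word_features = [counts[word] / total for word in VOCABULARY]
    metadata_feature = 1.0 if reply_to_differs else 0.0
    return word_features + [metadata_feature]


email_vector = email_to_vector(
    "You are a WINNER",
    "Claim your free prize, free of charge",
    reply_to_differs=True,
)
print(len(email_vector))  # 5: four word frequencies and one metadata flag
```

A classifier trained on such vectors sees both kinds of evidence at once, which a purely keyword-based rule would not.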

Utilising Numerical Features in Data Analysis

Advanced applications extend to voice recognition and industrial monitoring. Sound engineers transform audio waves into spectral components:

| Data Type | Key Features | Analysis Technique |
|---|---|---|
| Speech | Frequency harmonics, pitch contours | Phoneme matching |
| Sensor readings | Vibration patterns, thermal gradients | Anomaly detection |

These processing methods enable predictive maintenance in manufacturing plants, reducing equipment downtime by 40% in recent UK trials.
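Anomaly detection on sensor readings can be sketched with a simple z-score rule. This is one basic technique among many, not necessarily what any production system uses; the vibration values and the threshold of 2.0 are invented for illustration.

```python
from statistics import mean, pstdev


def zscore_anomalies(readings, threshold=2.0):
    """Return indices of readings more than `threshold` standard deviations from the mean."""
    mu = mean(readings)
    sigma = pstdev(readings)
    if sigma == 0:
        return []  # all readings identical, nothing stands out
    return [i for i, r in enumerate(readings) if abs(r - mu) / sigma > threshold]


# Hypothetical vibration velocities in mm/s; index 6 is an obvious spike.
vibration_mm_s = [2.1, 2.0, 2.2, 2.1, 1.9, 2.0, 6.5, 2.1, 2.0, 2.2]

anomalies = zscore_anomalies(vibration_mm_s)
print(anomalies)  # [6]
```

Because the spike itself inflates the mean and standard deviation, more robust statistics such as the median are often preferred on small samples.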

Feature Engineering: Selection, Extraction and Data Representation

Transforming raw information into actionable insights requires meticulous data craftsmanship. This process combines algorithmic precision with human intuition to create representations that reveal hidden patterns.

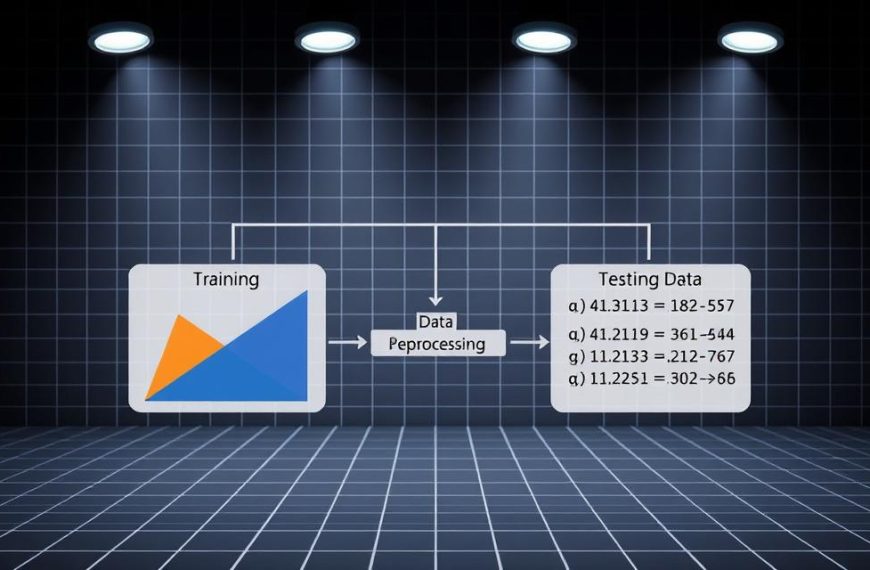

From Raw Data to Constructed Feature Vectors

Effective representation begins with strategic transformations. Healthcare analysts might calculate a patient’s age at death from birth and death years, while e-commerce platforms derive purchase frequency from transaction histories. These constructed features often prove more informative than the raw inputs they are built from.
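Both constructed features mentioned above take only a few lines each. The function names and the definition of frequency used here (purchases per 30 days over the observed span) are assumptions for the sake of the sketch; other definitions are equally reasonable.

```python
from datetime import date


def age_at_death(birth_year, death_year):
    """Approximate age in whole years, derived from two raw year fields."""
    return death_year - birth_year


def purchases_per_30_days(purchase_dates):
    """Purchase count scaled to a 30-day window over the observed span."""
    if not purchase_dates:
        return 0.0
    span_days = (max(purchase_dates) - min(purchase_dates)).days + 1
    return len(purchase_dates) * 30 / span_days


# Hypothetical transaction history covering 60 days inclusive.
history = [date(2024, 1, 1), date(2024, 1, 20), date(2024, 2, 10), date(2024, 2, 29)]

print(age_at_death(1950, 2020))        # 70
print(purchases_per_30_days(history))  # 2.0
```

In each case the model receives one number that summarises several raw fields, which is the point of constructing the feature.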

Modern approaches balance automated techniques with domain knowledge:

| Method | Process | Use Case |
|---|---|---|
| Principal Component Analysis | Identifies orthogonal variance axes | Financial fraud detection |
| Expert-driven ratios | Combines existing measurements | Clinical risk scores |

Data scientists iteratively test feature combinations using learning algorithms. A retail dataset might go through 15 or more feature variations before prediction accuracy stops improving. Because repeated trials can themselves overfit, each variation should be scored on held-out data; done this way, the iterative approach helps limit overfitting while maintaining real-world relevance.
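To show what "orthogonal variance axes" means for Principal Component Analysis in the table above, here is a minimal two-feature sketch. It uses the closed-form eigenvalues of a 2x2 covariance matrix, so it only works for exactly two features; the function name `pca_2d` is an assumption, and the data reuses the earlier height and weight rows.

```python
import math


def pca_2d(rows):
    """PCA for two features: returns (first axis, eigenvalues, projected scores)."""
    n = len(rows)
    mean_x = sum(r[0] for r in rows) / n
    mean_y = sum(r[1] for r in rows) / n
    centred = [(r[0] - mean_x, r[1] - mean_y) for r in rows]

    # Entries of the sample covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centred) / (n - 1)
    syy = sum(y * y for _, y in centred) / (n - 1)
    sxy = sum(x * y for x, y in centred) / (n - 1)

    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    lam1, lam2 = half_trace + radius, half_trace - radius

    # Eigenvector for the larger eigenvalue, normalised to unit length.
    if sxy != 0:
        v = (sxy, lam1 - sxx)
    else:
        v = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    norm = math.hypot(v[0], v[1])
    axis = (v[0] / norm, v[1] / norm)

    scores = [x * axis[0] + y * axis[1] for x, y in centred]
    return axis, (lam1, lam2), scores


rows = [[175.0, 68.0], [182.0, 75.0], [168.0, 62.0]]
axis, (lam1, lam2), scores = pca_2d(rows)
explained = lam1 / (lam1 + lam2)
print(explained > 0.99)  # True: height and weight move almost together here
```

With three nearly collinear points, one axis captures almost all the variance, so each person could be summarised by a single score instead of two measurements.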

Challenges multiply with unstructured data types. Image recognition systems might process 100+ texture parameters, whereas text analysis tools track syntactic patterns across multilingual corpora. Successful implementations adapt extraction methods to each domain’s unique requirements.

Conclusion

Numerical representations form the backbone of intelligent systems across industries. These structured formats enable algorithms to process everything from medical scans to stock market trends with mathematical precision. Their adaptability ensures relevance in evolving fields like reinforcement learning and generative AI.

Effective feature engineering remains vital for model success. While automated systems excel at pattern detection, human domain expertise shapes meaningful interpretations of complex data. This synergy produces vectors that capture essential characteristics while reducing computational overhead.

Emerging techniques continue refining how systems handle high-dimensional information. Innovations in neural networks and transfer learning demonstrate particular promise for processing intricate gradient patterns. Such advancements maintain these numerical frameworks as indispensable tools in AI development.

From voice recognition to predictive maintenance, structured machine learning inputs drive technological progress. Their universal application across networks and platforms confirms their enduring role in transforming raw information into actionable intelligence.