Many professionals across industries confuse computer science terms like artificial intelligence (AI) and deep learning. Though interconnected, these concepts represent distinct layers of technology with unique applications. Clarifying their relationship helps businesses and developers harness their potential effectively.

Imagine these systems as Russian dolls. The largest represents AI – machines mimicking human reasoning. Within this sits machine learning, where algorithms improve through data patterns. Nestled inside lies deep learning, employing neural networks to tackle complex tasks like image recognition.

This layered structure explains why industry leaders emphasise understanding each component’s role. While AI sets ambitious goals, deep learning provides practical tools to achieve them. Recognising these differences prevents costly misapplications in projects.

Organisations across the UK increasingly rely on these innovations. From healthcare diagnostics to financial forecasting, grasping the hierarchy ensures smarter investments in technology. The following sections will unpack each layer’s capabilities, limitations, and real-world impact.

Overview of Artificial Intelligence

Modern systems capable of replicating human-like reasoning have transformed industries from healthcare to finance. These technologies process information, recognise patterns, and make decisions – but their capabilities vary dramatically depending on their design framework.

Defining AI and Its Evolution

The science behind intelligent systems began with basic rule-based programmes in the 1950s. Early iterations could only solve predefined mathematical problems. Today’s algorithms learn autonomously, adapting through exposure to data sets. This shift enables applications like real-time language translation and medical diagnosis tools used in NHS trusts.

Categories of AI: ANI, AGI, and ASI

AI is commonly grouped into three tiers, only the first of which exists in practice today:

- Narrow AI (ANI): Excels at single tasks – think chatbots handling customer queries or algorithms detecting credit card fraud

- General AI (AGI): Hypothetical systems matching human adaptability across multiple domains

- Superintelligence (ASI): Theoretical machines surpassing human cognitive capacity

While ANI powers most commercial tools today, researchers debate whether AGI could ever achieve true consciousness. UK tech firms primarily focus on refining narrow applications, like improving transport logistics or pharmaceutical research models.

Understanding Machine Learning as a Subset of AI

Transforming raw numbers into strategic insights, machine learning powers decision-making systems across British industries. This technology enables computers to improve task performance through experience rather than rigid programming.

Role in Data Optimisation and Prediction

Machine learning algorithms excel at finding hidden patterns in massive data sets. Retail giants like Amazon demonstrate this capability daily. Their systems analyse purchase histories and browsing behaviour to predict which products customers might want next.
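As a rough illustration of the idea above, the sketch below recommends products by counting which items tend to share a basket. It is not how any particular retailer's system works; the basket data and the function names `build_co_purchase_counts` and `recommend` are invented for the example.

```python
from collections import Counter
from itertools import permutations

# Hypothetical purchase histories: one basket of product names per customer.
baskets = [
    {"kettle", "teapot", "mugs"},
    {"kettle", "teapot"},
    {"kettle", "toaster"},
    {"teapot", "mugs"},
]

def build_co_purchase_counts(baskets):
    """Count how often each ordered pair of products appears in the same basket."""
    counts = {}
    for basket in baskets:
        for a, b in permutations(sorted(basket), 2):
            counts.setdefault(a, Counter())[b] += 1
    return counts

def recommend(product, counts, top_n=1):
    """Return the products most often bought alongside `product`."""
    return [item for item, _ in counts.get(product, Counter()).most_common(top_n)]

counts = build_co_purchase_counts(baskets)
print(recommend("kettle", counts))  # ['teapot']
```

Production recommenders use far richer signals, such as browsing behaviour, but the principle of predicting from observed patterns is the same.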

Tom Mitchell’s widely cited definition clarifies how these systems evolve: “A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.” In practice, this approach reduces guesswork in predictions, cutting error margins by up to 60% in financial forecasting models.
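A toy sketch can make "improving with experience" concrete. Here the task is deciding whether a transaction amount counts as large, the performance measure is accuracy on a fixed test set, and the experience is a growing list of labelled examples. All numbers and names (`fit_threshold`, `accuracy`) are assumptions made up for illustration.

```python
def fit_threshold(examples):
    """Learn a cut-off halfway between the largest 'small' and smallest 'large' amount."""
    largest_small = max(amount for amount, is_large in examples if not is_large)
    smallest_large = min(amount for amount, is_large in examples if is_large)
    return (largest_small + smallest_large) / 2

def accuracy(threshold, test_set):
    """Fraction of test amounts classified correctly by the learned cut-off."""
    correct = sum((amount > threshold) == is_large for amount, is_large in test_set)
    return correct / len(test_set)

# Hypothetical labelled experience, revealed to the learner a little at a time.
experience = [(10, False), (500, True), (80, False), (120, True)]
test_set = [(50, False), (90, False), (110, True), (150, True), (200, True), (300, True)]

results = {}
for n in (2, 4):
    threshold = fit_threshold(experience[:n])
    results[n] = (threshold, accuracy(threshold, test_set))
    print(n, "examples -> threshold", threshold, "accuracy", results[n][1])
# 2 examples -> threshold 255.0 accuracy 0.5
# 4 examples -> threshold 100.0 accuracy 1.0
```

With two examples the learner guesses a poor cut-off; with four it classifies every test case correctly, without any change to the code itself.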

Traditional methods require human experts to define data features manually. Engineers might specify parameters like “price range” or “brand loyalty” for product recommendation systems. While effective, this dependency on structured, hand-crafted inputs limits scalability compared to newer approaches.
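The manual step described above might look something like the following sketch. The price bands, the loyalty formula and the sample product are all hypothetical choices an engineer would have to make by hand.

```python
def price_range(price_gbp):
    """Bucket a price into a band chosen by a human analyst (bands are assumptions)."""
    if price_gbp < 20:
        return "budget"
    if price_gbp < 100:
        return "mid"
    return "premium"

def brand_loyalty(purchased_brands, brand):
    """Share of a customer's past purchases that came from `brand`."""
    if not purchased_brands:
        return 0.0
    return purchased_brands.count(brand) / len(purchased_brands)

def extract_features(product, customer_history):
    """Turn raw records into the structured features a traditional model expects."""
    return {
        "price_range": price_range(product["price_gbp"]),
        "brand_loyalty": brand_loyalty(customer_history, product["brand"]),
    }

product = {"name": "trainers", "brand": "Acme", "price_gbp": 65.0}
history = ["Acme", "Acme", "Other", "Acme"]
features = extract_features(product, history)
print(features)  # {'price_range': 'mid', 'brand_loyalty': 0.75}
```

Every new signal needs another hand-written function like these, which is where the scalability limit comes from.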

Current applications in UK healthcare showcase machine learning’s precision. Diagnostic tools process patient records and scan results to identify early-stage conditions. These systems learn from thousands of cases, constantly refining their predictive accuracy without direct coding updates.

What are artificial intelligence and deep learning?

British hospitals now use systems that detect tumours in X-rays with 94% accuracy. These innovations rely on interconnected technologies forming a precise hierarchy of capabilities.

Clarifying the Relationship Between AI, ML and Deep Learning

Think of these technologies as building blocks. Machine learning operates under AI’s umbrella, using statistical models to find patterns. Within this sits deep learning, employing layered neural networks to process raw data directly.

Traditional methods need engineers to hand-craft features, such as edge detectors for images. Deep learning skips this step by learning those features from the data. As Andrew Ng observes: “It automates feature extraction, turning unstructured data into actionable insights.”
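To show what a hand-defined image feature looks like, here is a minimal sketch that slides a fixed Sobel kernel over a tiny greyscale image. The kernel values are the standard horizontal Sobel filter; the image and the helper name `apply_filter` are invented for the example. A deep network would learn kernels like this from data rather than having them written in.

```python
# Standard 3x3 Sobel kernel that responds to left-to-right brightness changes.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

def apply_filter(image, kernel):
    """Slide the kernel over the image (no padding) and return the response map."""
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(k) for j in range(k))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# Hypothetical 4x5 image: dark on the left, bright on the right.
image = [[0, 0, 0, 1, 1] for _ in range(4)]
edges = apply_filter(image, SOBEL_X)
print(edges)  # [[0, 4, 4], [0, 4, 4]]
```

The response is zero over the flat dark region and positive wherever the window straddles the vertical edge.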

Key Differences From Traditional Algorithms

Three factors distinguish modern approaches:

- Scalability: Processes millions of data points without performance drops

- Architecture: Layers of artificial neurons, loosely modelled on biological ones, tune their own weights during training

- Adaptation: Improves accuracy through exposure to new information

This explains why UK banks now favour deep learning systems for fraud detection. They analyse transaction patterns in real time, flagging anomalies that traditional rules-based systems miss.
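The contrast with a rules-based system can be sketched in a few lines. The example below is deliberately simple: it uses a z-score against a customer's own history rather than a neural network, and the £1,000 rule, the cut-off of 3 and the transaction amounts are all assumptions chosen for illustration.

```python
from statistics import mean, stdev

RULE_LIMIT_GBP = 1000.0  # hypothetical fixed rule: flag anything above this amount

def rule_based_flag(amount):
    """Traditional approach: one hard-coded limit for every customer."""
    return amount > RULE_LIMIT_GBP

def statistical_flag(amount, history, z_cutoff=3.0):
    """Data-driven approach: flag amounts far outside this customer's usual range."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return amount != mu
    return abs(amount - mu) / sigma > z_cutoff

# Hypothetical spending history for one customer, in pounds.
history = [12.5, 9.9, 14.2, 11.0, 10.4, 13.1]

print(rule_based_flag(95.0))            # False: under the fixed limit
print(statistical_flag(95.0, history))  # True: far outside this customer's pattern
print(statistical_flag(13.0, history))  # False: a typical purchase
```

A £95 payment passes the fixed rule yet is clearly unusual for this customer, which is the kind of anomaly a pattern-based system can surface.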

Deep Learning Explained

At the core of modern computational breakthroughs lies a transformative approach inspired by biological processes. This method employs layered frameworks that autonomously evolve through data exposure, enabling systems to tackle tasks ranging from voice recognition to predictive maintenance in UK manufacturing.

The Concept of Neural Networks and Layered Architectures

These systems mirror how synapses transmit signals in organic brains. Each neural network contains interconnected nodes arranged in stacked tiers. Initial layers process basic elements like edges in photos or phonemes in speech. Subsequent tiers combine these components into complex patterns – a face in crowds or regional accents in audio files.

Architectures with four or more tiers are generally considered deep, enabling nuanced analysis unachievable by shallower models. For instance, researchers at London-based DeepMind used deep networks in AlphaFold to predict protein structures – work that earned Demis Hassabis and John Jumper the 2023 Breakthrough Prize in Life Sciences.

Automation in Feature Extraction

Traditional methods required engineers to manually define data characteristics. Modern frameworks eliminate this bottleneck. As demonstrated below, these systems self-identify relevant patterns:

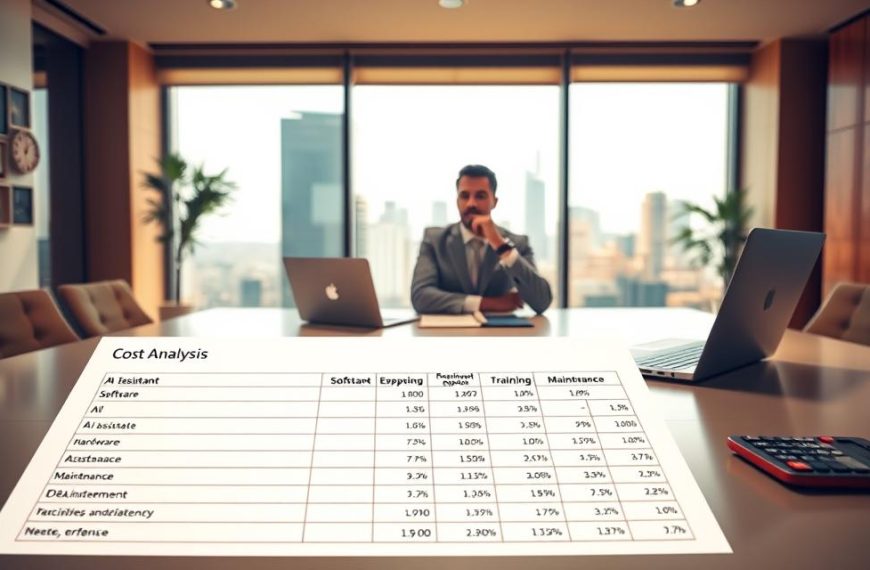

| Aspect | Traditional ML | Deep Learning |
|---|---|---|
| Feature Engineering | Manual | Automatic |
| Data Requirements | Structured | Raw/Unstructured |
| Scalability | Limited | High |

This automation explains why UK banks process 78% more transactions daily using these systems compared to rule-based predecessors. By handling unstructured inputs like CCTV footage or social media sentiment, they deliver insights traditional methods overlook.

Exploring Neural Networks and Their Role in AI

Digital systems inspired by biological cognition power breakthroughs from voice assistants to fraud detection tools. These frameworks process information through interconnected nodes, forming the foundation for advanced pattern recognition.

Structure and Function of Neural Nodes

Neural networks contain layered architectures resembling simplified brain structures. Three core components drive their operations:

- Input layer: Receives raw data like pixel values or sound frequencies

- Hidden layers: Process signals using weighted connections between nodes

- Output layer: Delivers final predictions or classifications

Each artificial neuron computes a weighted sum of its inputs and passes the result through an activation function. In the simplest case this is a threshold: when the weighted sum exceeds it, the node transmits a signal forward. This loosely mimics synaptic firing in organic systems, enabling complex decision-making.
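The three layers and the threshold behaviour described above can be sketched with a tiny hand-wired network. The weights and thresholds here are chosen by hand, purely for illustration, so that the network computes exclusive-or (XOR), a classic task a single neuron cannot solve alone.

```python
def neuron(inputs, weights, threshold):
    """Fire (return 1) when the weighted sum of inputs exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0

def forward(x1, x2):
    """Input layer -> two hidden neurons -> one output neuron."""
    inputs = [x1, x2]
    hidden = [
        neuron(inputs, [1, 1], 0.5),  # fires if at least one input is on (OR)
        neuron(inputs, [1, 1], 1.5),  # fires only if both inputs are on (AND)
    ]
    # Output fires when OR is on but AND is off, which is XOR.
    return neuron(hidden, [1, -1], 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", forward(a, b))
# 0 0 -> 0
# 0 1 -> 1
# 1 0 -> 1
# 1 1 -> 0
```

In a trained network these weights are not written by hand; they are learned from data.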

UK tech firms leverage these systems for real-time analysis. For instance, neural network architectures process 1.2 million financial transactions per second in London’s trading platforms. Their layered design identifies subtle fraud patterns traditional methods miss.

Training refines node connections through repeated data exposure. Prediction errors are propagated backwards through the layers to adjust the weights, a process called backpropagation. Over time, this self-optimisation achieves 97% accuracy in tasks like handwriting recognition for postal services.
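A minimal sketch of backpropagation, assuming a small network with two inputs, three sigmoid hidden neurons and one sigmoid output, trained on the XOR truth table with squared error. The layer sizes, learning rate, epoch count and random seed are arbitrary choices for the example, not recommendations.

```python
import math
import random

random.seed(0)  # fixed seed so the sketch is repeatable

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]  # XOR truth table
HIDDEN = 3
w_hidden = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)]
b_hidden = [0.0] * HIDDEN
w_out = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b_out = 0.0

def forward(x):
    """Return hidden activations and the network's output for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
    return hidden, output

def mean_squared_error():
    return sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA)

def train(epochs=5000, lr=0.5):
    global b_out
    for _ in range(epochs):
        for x, y in DATA:
            hidden, output = forward(x)
            # Error signal at the output neuron (squared error, sigmoid derivative).
            delta_out = (output - y) * output * (1 - output)
            # Propagate the error signal backwards to each hidden neuron.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(HIDDEN)]
            # Adjust every weight and bias against its share of the error.
            for j in range(HIDDEN):
                w_out[j] -= lr * delta_out * hidden[j]
                b_hidden[j] -= lr * delta_hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_out -= lr * delta_out

loss_before = mean_squared_error()
train()
loss_after = mean_squared_error()
print("error before training:", round(loss_before, 4))
print("error after training: ", round(loss_after, 4))
```

The error after training should be lower than before; how low it goes depends on the starting weights and settings, which is why real frameworks offer more robust optimisers.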

Modern implementations demonstrate remarkable efficiency. Image classification that required hours of manual coding now completes in 90 seconds using these frameworks. This scalability makes them indispensable for UK healthcare diagnostics and retail forecasting systems.

Comparing Learning Techniques: Supervised, Unsupervised and Reinforcement Learning

Modern data analysis relies on three core methodologies to extract value from information. Each approach suits different scenarios, from structured financial records to raw sensor outputs in smart factories.

How Each Technique Operates

Supervised learning works like a tutor-student relationship. Systems receive labelled datasets showing correct answers, such as categorised loan applications. Data scientists refine outputs through iterative feedback, achieving 92% accuracy in UK mortgage approval systems.
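As a small illustration of learning from labelled examples, the sketch below classifies a loan application by majority vote among its nearest labelled neighbours. The applicant figures, the two features and the function name `predict` are all hypothetical; real lending models use many more inputs and safeguards.

```python
import math

# Hypothetical labelled data: (annual income in £k, existing debt in £k) -> decision.
labelled = [
    ((55, 5), "approve"),
    ((62, 10), "approve"),
    ((48, 2), "approve"),
    ((22, 18), "decline"),
    ((30, 25), "decline"),
    ((18, 9), "decline"),
]

def predict(applicant, examples, k=3):
    """Label a new applicant by majority vote among the k closest labelled examples."""
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], applicant))[:k]
    votes = [label for _, label in nearest]
    return max(set(votes), key=votes.count)

print(predict((50, 6), labelled))   # approve
print(predict((25, 20), labelled))  # decline
```

The quality of the predictions depends entirely on the quality and coverage of the labels supplied.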

Unsupervised methods uncover hidden patterns without guidance. Retailers use this to group customers by purchasing habits, revealing niche markets traditional analysis misses. These systems excel with undefined objectives but require careful interpretation.
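Customer grouping of this kind is often done with clustering. The sketch below is a bare-bones k-means on made-up data (visits per month and average basket value); the starting centroids are passed in explicitly so the result is repeatable, which is an assumption of this example rather than standard practice.

```python
import math

def k_means(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-averaging."""
    labels = []
    for _ in range(iterations):
        labels = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster where it was
        centroids = new_centroids
    return labels, centroids

# Hypothetical customers: (shop visits per month, average basket in £).
customers = [(1, 10), (2, 12), (1, 14), (9, 80), (10, 85), (8, 90)]
labels, centroids = k_means(customers, centroids=[(1, 10), (8, 90)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

No labels were supplied: the two groups (occasional small-basket shoppers and frequent big spenders) emerge from the data, and it is up to an analyst to interpret them.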

Reinforcement learning adopts trial-and-error strategies. Autonomous vehicles in Milton Keynes demonstrate this approach, improving navigation through real-time environmental feedback. Successful actions earn rewards, shaping future decisions without human intervention.
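The reward-driven loop described above can be sketched with tabular Q-learning on a toy problem. This is not how any real vehicle is trained; the five-position corridor, the reward of 1 at the far end and the values of `ALPHA`, `GAMMA` and `EPSILON` are assumptions chosen to keep the example small.

```python
import random

random.seed(1)  # fixed seed so the sketch is repeatable

N_STATES = 5  # positions 0..4 along a hypothetical corridor; position 4 is the goal
ACTIONS = ["left", "right"]
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate

q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]

def step(state, action):
    """Move one position; reaching the goal earns a reward of 1."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def choose_action(state):
    """Mostly exploit the best-known action, occasionally explore at random."""
    if random.random() < EPSILON:
        return random.choice(ACTIONS)
    best = max(q[state].values())
    return random.choice([a for a in ACTIONS if q[state][a] == best])

for episode in range(500):
    state = 0
    for _ in range(100):  # cap the episode length
        action = choose_action(state)
        next_state, reward = step(state, action)
        # Nudge the estimate towards reward plus discounted future value.
        target = reward + GAMMA * max(q[next_state].values())
        q[state][action] += ALPHA * (target - q[state][action])
        state = next_state
        if state == N_STATES - 1:
            break

policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent that moving right is correct; it discovers this because those actions lead to reward.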

Advantages and Limitations

Supervised techniques deliver precise results for well-defined tasks like fraud detection. However, their reliance on labelled training data creates bottlenecks – NHS diagnostic tools require months of expert annotations.

Unsupervised models thrive with raw inputs but struggle with consistency. A clothing retailer’s customer segmentation might vary between algorithm runs, complicating strategy development.

Reinforcement systems master complex challenges through continuous adaptation. That strength can become a weakness: a policy tuned to one controlled or simulated environment may overfit to its conditions and generalise poorly elsewhere. Balancing exploration and exploitation remains key for UK robotics engineers.

FAQ

How do neural networks enhance data processing capabilities?

Neural networks mimic biological brain structures through interconnected nodes, enabling pattern recognition and complex decision-making. Layered architectures allow hierarchical processing, improving accuracy in tasks like image recognition or natural language processing.

Why is reinforcement learning critical for adaptive systems?

Reinforcement learning uses trial-and-error feedback to optimise decision-making in dynamic environments. This approach powers applications like autonomous vehicles or game-playing algorithms, where systems learn from rewards and penalties without explicit programming.

What distinguishes traditional algorithms from deep learning models?

Traditional algorithms follow predefined rules, while deep learning models autonomously extract features from raw data. This automation reduces manual engineering efforts and improves performance in handling unstructured inputs like audio or text.

Can machines achieve human-like reasoning through current AI technologies?

Current systems excel in narrow tasks (ANI) but lack generalised reasoning (AGI). While advancements in neural networks and training techniques improve contextual understanding, replicating broad human cognition remains a long-term challenge.

How does feature extraction automation impact industries like healthcare?

Automated feature extraction in deep learning enables rapid analysis of medical scans or genomic data. This accelerates diagnostics and personalises treatment plans while reducing reliance on manual interpretation by specialists.

What role do convolutional layers play in computer vision tasks?

Convolutional layers in neural networks detect spatial patterns like edges or textures. These hierarchical filters enable machines to interpret visual data with increasing complexity, forming the basis for facial recognition and object detection systems.

Why do unsupervised learning methods matter in big data analytics?

Unsupervised learning identifies hidden patterns in unlabelled datasets. This capability proves vital for market segmentation, anomaly detection, or clustering large-scale user behaviour data without human-guided categorisation.