Mastering machine learning often feels like solving a puzzle with missing pieces. The field combines mathematical rigour, programming precision, and data science expertise, creating a steep climb for newcomers. While basic concepts like linear regression seem approachable, advanced frameworks demand specialised knowledge in calculus, statistics, and software design.

One core issue lies in its multidisciplinary nature. Success requires blending skills from mathematics, computer science, and domain-specific industries. For example, building a recommendation system involves probability theory and coding proficiency. This fusion of disciplines creates a unique barrier for those starting out.

Despite these hurdles, foundational techniques remain accessible. Beginners can achieve tangible results using open-source libraries without deep theoretical knowledge. Tools like Python’s Scikit-learn simplify tasks like classification or clustering, offering practical entry points.

The complexity escalates with advanced projects. Neural networks and deep learning architectures require understanding optimisation algorithms and hardware limitations. Such demands explain why practitioners often face a steep learning curve when transitioning from basics to cutting-edge applications.

Recognising these challenges helps set realistic goals. Structured learning paths and patience become essential. By prioritising core concepts first, even those new to the field can navigate its complexities effectively.

Introduction to Machine Learning

Artificial intelligence’s most dynamic branch allows systems to evolve through experience rather than rigid instructions. This capability powers everything from personalised shopping recommendations to fraud detection systems. Let’s explore its core principles and typical obstacles newcomers face.

What Is Machine Learning?

At its heart, this discipline focuses on creating algorithms that detect patterns in data. These patterns enable predictions or decisions without explicit programming. For instance, email filters improve automatically by analysing which messages users mark as spam.

Two primary approaches dominate the field:

| Type | Data Structure | Common Uses |
|---|---|---|
| Supervised Learning | Labelled examples | Price prediction, spam detection |
| Unsupervised Learning | Unlabelled data | Customer segmentation, anomaly detection |

Common Challenges for Beginners

Newcomers often feel overwhelmed by the sheer variety of models available. Choosing between decision trees, neural networks, or clustering techniques requires understanding each method’s strengths. Many struggle to match algorithms with their project’s specific data characteristics.

Another hurdle involves balancing theory with practice. While libraries like Scikit-learn simplify implementation, grasping underlying mathematical concepts remains crucial. Allocating sufficient time for both coding exercises and theoretical study proves vital for long-term success.

Foundational Mathematics for Machine Learning

Quantitative skills form the backbone of effective model development in data-driven fields. Three core disciplines – linear algebra, calculus, and probability theory – provide the scaffolding for understanding algorithmic behaviour. Without these fundamentals, interpreting how models process information becomes akin to navigating without a compass.

Linear Algebra and Calculus

Matrix operations govern how algorithms transform input data into outputs. Techniques like eigenvalue decomposition power dimensionality reduction in principal component analysis. Neural network layers are largely built from matrix-vector products, so the same operations dictate how each layer transforms its inputs.
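To make the link between eigenvalue decomposition and principal component analysis concrete, here is a minimal sketch using NumPy. The function name `pca_eig` and the synthetic data are illustrative assumptions, not part of any library.

```python
import numpy as np

def pca_eig(X, n_components):
    """Project X (samples x features) onto its top principal components."""
    X_centred = X - X.mean(axis=0)
    cov = np.cov(X_centred, rowvar=False)
    # eigh is intended for symmetric matrices; eigenvalues come back ascending.
    eigenvalues, eigenvectors = np.linalg.eigh(cov)
    order = np.argsort(eigenvalues)[::-1][:n_components]
    components = eigenvectors[:, order]
    explained = eigenvalues[order] / eigenvalues.sum()
    return X_centred @ components, explained

rng = np.random.default_rng(0)
t = rng.normal(size=200)
# Two strongly correlated features plus a little noise (hypothetical data).
X = np.column_stack([t, 2.0 * t + rng.normal(scale=0.1, size=200)])
projected, explained = pca_eig(X, n_components=1)
```

Because the two features move almost in lockstep, a single component should capture nearly all of the variance, which is the sense in which the decomposition reduces dimensionality.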

Differential calculus drives optimisation processes through gradient descent. This method adjusts model parameters step by step in the direction that reduces the error, using the derivatives of the loss with respect to each parameter. Understanding it marks the difference between running a model and knowing why its training converges or stalls.
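As a rough illustration of gradient descent, the sketch below fits a straight line by repeatedly stepping against the derivative of the mean squared error. The function name `fit_line`, the learning rate, and the toy data are assumptions chosen for the example.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
```

On this noise-free data the parameters should approach a slope of 2 and an intercept of 1.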

Statistics and Probability Essentials

Probability distributions quantify uncertainty in predictions, from Gaussian curves to Poisson processes. Bayes’ theorem enables systems to update beliefs as new evidence emerges. These concepts prove vital when evaluating model reliability in real-world scenarios.
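A small worked example may help show how Bayes' theorem updates a belief. The figures below describe a hypothetical spam filter and are invented purely for illustration.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(hypothesis | evidence) using Bayes' theorem."""
    # Total probability of seeing the evidence at all.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence

# Assumed figures: 20% of mail is spam; the word "prize" appears in
# 60% of spam and in 5% of legitimate mail.
p_spam_given_word = posterior(prior=0.2, likelihood=0.6, false_positive_rate=0.05)
# 0.12 / (0.12 + 0.04) = 0.75
```

Seeing the word raises the estimated probability of spam from the 20% prior to 75%.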

Statistical inference techniques like hypothesis testing help establish whether results stem from genuine patterns or random chance. Practitioners use these tools to judge, for example, whether a gap in precision or recall between two models is larger than noise alone would produce. Solid statistics knowledge transforms raw outputs into defensible conclusions.
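One simple way to ask whether a difference could be down to chance is a permutation test. This is only one of several possible tests, sketched with the standard library; the scores for the two models are made up for the example.

```python
import random

def permutation_test(a, b, n_permutations=5000, seed=0):
    """Estimate a two-sided p-value for the difference in means of a and b."""
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        # Shuffle the labels: under the null hypothesis they are interchangeable.
        rng.shuffle(pooled)
        left, right = pooled[:len(a)], pooled[len(a):]
        diff = abs(sum(left) / len(left) - sum(right) / len(right))
        if diff >= observed:
            hits += 1
    # The add-one correction keeps the estimate away from exactly zero.
    return (hits + 1) / (n_permutations + 1)

# Hypothetical accuracy scores from eight runs of two models.
model_a = [0.81, 0.79, 0.83, 0.80, 0.82, 0.84, 0.78, 0.81]
model_b = [0.71, 0.73, 0.70, 0.74, 0.72, 0.69, 0.73, 0.71]
p_value = permutation_test(model_a, model_b)
```

A small p-value suggests the gap between the two sets of scores is unlikely to arise from random relabelling alone.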

While the mathematical demands appear intense, targeted revision of school-level maths builds confidence. Pairing theory with coding exercises in Python bridges abstract principles to tangible implementations. This dual approach demystifies complex formulae through practical experimentation.

Data Analysis and Visualisation Techniques

The journey from raw numbers to actionable insights hinges on systematic data analysis and visual storytelling. By a commonly cited estimate, practitioners spend up to 80% of their time cleaning and structuring data sets, making these skills indispensable for reliable outcomes.

Understanding and Preparing Data Sets

Effective analysis begins with loading and inspecting information. Common challenges include missing values, duplicate entries, and inconsistent formatting. Tools like Pandas simplify these steps through functions that flag missing values, drop duplicates, and convert column types.

Critical preparation tasks include:

| Step | Tool | Purpose |
|---|---|---|
| Missing Value Handling | Scikit-learn | Impute gaps using median/mode |
| Feature Scaling | NumPy | Normalise numerical ranges |
| Categorical Encoding | Category Encoders | Convert text to numerical values |
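The three preparation steps can be sketched in a few lines. For brevity this example uses Pandas alone rather than the tools listed in the table, and the column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical toy data; column names are illustrative only.
df = pd.DataFrame({
    "age": [25.0, None, 40.0, 31.0],
    "income": [30000.0, 52000.0, None, 41000.0],
    "city": ["Leeds", "Bristol", "Leeds", None],
})

# 1. Missing values: median for numeric columns, mode for the categorical one.
for column in ["age", "income"]:
    df[column] = df[column].fillna(df[column].median())
df["city"] = df["city"].fillna(df["city"].mode()[0])

# 2. Feature scaling: min-max normalisation to the range [0, 1].
for column in ["age", "income"]:
    low, high = df[column].min(), df[column].max()
    df[column] = (df[column] - low) / (high - low)

# 3. Categorical encoding: one indicator column per city.
prepared = pd.get_dummies(df, columns=["city"])
```

In a real project the imputation and scaling statistics would normally be computed on the training split only and then reused on new data, which is one reason dedicated transformer classes are often preferred.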

Effective Visualisation Approaches

Charts transform abstract data into digestible narratives. Heatmaps reveal variable correlations, while scatter plots highlight clustering patterns. Interactive dashboards using Plotly allow real-time exploration of multi-dimensional data sets.

Key principles for impactful visuals:

- Use colour gradients to represent intensity

- Limit pie charts to 5 categories maximum

- Annotate outliers with contextual notes

Programming Basics and Tools

Digital solutions demand proficiency in key programming languages. Whether developing algorithms or cleaning data sets, coding skills enable practitioners to translate theoretical concepts into functional software. This foundation proves particularly vital when working with large-scale data systems.

Essential Languages for Technical Work

Python dominates data science due to its intuitive syntax and specialised libraries like TensorFlow. Developers appreciate its versatility in handling tasks from database management to neural network design. However, other languages complement Python in professional environments:

| Language | Primary Use | Key Advantage |
|---|---|---|
| R | Statistical analysis | Advanced visualisation tools |
| C++ | High-performance computing | Memory efficiency |
| Java | Enterprise systems | Platform independence |

Mastering multiple tools allows professionals to select the best fit for a given task. For instance, C++ often underpins real-time trading algorithms, while Java maintains popularity in banking systems.

Structured learning pathways accelerate competency development. Many UK-based training programmes combine Python fundamentals with practical projects involving Pandas and NumPy. Bootcamps frequently include collaborative work simulating industry scenarios.

Beyond syntax, effective programmers understand debugging techniques and algorithm optimisation. Regular practice with platforms like LeetCode builds problem-solving skills crucial for complex implementations. This hands-on approach bridges theoretical knowledge and production-ready code.

Selecting the Right Machine Learning Framework

Choosing technical tools shapes both immediate results and future scalability in data projects. With numerous open-source options available, developers must balance ease of use with long-term functionality. Strategic selection prevents wasted effort and aligns software capabilities with project goals.

Overview of Popular Libraries

Python’s ecosystem offers specialised tools for distinct tasks. NumPy accelerates numerical computations through array processing, while Pandas simplifies data cleaning with DataFrame structures. Scikit-learn provides pre-built algorithms for classification and regression tasks, reducing coding overhead.

Consider these primary use cases:

- NumPy: Matrix operations & mathematical functions

- Pandas: Dataset manipulation & time-series analysis

- Scikit-learn: Implementing supervised/unsupervised models

Starter Toolkits for Beginners

Newcomers benefit from software with intuitive interfaces and robust documentation. Google Colab provides a cloud-based environment preloaded with essential libraries, eliminating setup hassles. For structured learning, Kaggle’s micro-courses integrate hands-on practice with real datasets.

Key selection factors include:

- Community support quality

- Error message clarity

- Pre-built template availability

Starting simple allows gradual skill development. Beginners often find success focusing on one toolkit before exploring advanced features. This approach builds confidence while delivering tangible results early in the learning journey.

Supervised and Unsupervised Learning Models

Data-driven decision-making relies heavily on two core methodologies: supervised and unsupervised learning models. These approaches dictate how systems interpret patterns, with each suited to specific data scenarios. Their availability in R and Python democratises access, allowing practitioners to tackle complex tasks using freely available tools.

Supervised models thrive on labelled datasets where input-output pairs guide predictions. Linear regression analyses continuous variables like house prices, while logistic regression handles binary outcomes such as spam detection. More advanced algorithms like random forests manage intricate relationships through ensemble techniques.

| Approach | Data Type | Common Algorithms |
|---|---|---|
| Supervised | Labelled | Linear regression, SVM |
| Unsupervised | Unlabelled | K-means, PCA |

Unsupervised techniques uncover hidden structures without predefined answers. Clustering algorithms group customers by purchasing behaviour, while dimensionality reduction simplifies visualisation tasks. These methods excel in exploratory analysis where relationships aren’t immediately obvious.
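As an illustration of clustering without labels, the sketch below groups synthetic customers with Scikit-learn's `KMeans`. The two features and the group parameters are assumptions invented for the example.

```python
import numpy as np
from sklearn.cluster import KMeans

rng = np.random.default_rng(0)
# Hypothetical customers: (annual spend, visits per month), in two loose groups.
low_spenders = rng.normal(loc=[200.0, 2.0], scale=[30.0, 0.5], size=(50, 2))
high_spenders = rng.normal(loc=[900.0, 8.0], scale=[60.0, 1.0], size=(50, 2))
customers = np.vstack([low_spenders, high_spenders])

# No labels are supplied; the algorithm infers the grouping from the data.
model = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = model.fit_predict(customers)
```

The features are left unscaled here, so annual spend dominates the distance calculation; with real data, scaling first is usually worth considering.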

Despite their differences, both approaches share practical accessibility. Python’s Scikit-learn library implements logistic regression in under 10 lines of code. Similarly, R’s cluster package simplifies grouping analyses for marketing teams. This ease lowers entry barriers for professionals across sectors.
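A short sketch shows how compact a logistic regression workflow can be in Scikit-learn. The choice of the bundled breast cancer dataset and the scaling step are assumptions made for this example rather than requirements.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)  # binary labels
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
# Scaling first helps the solver converge on features with different ranges.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)
accuracy = model.score(X_test, y_test)
```

The held-out accuracy gives a first impression only; a fuller evaluation would look at precision and recall as well.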

Choosing between methodologies depends on problem framing and data availability. Supervised models require historical labels for training, whereas unsupervised techniques work with raw datasets. Prioritising clarity in objectives ensures optimal algorithm selection and resource allocation.

Why Machine Learning is Hard

Developing robust AI systems demands expertise across multiple technical domains. Success requires merging advanced mathematics with software engineering principles – a combination few fields demand simultaneously. This multidisciplinary foundation explains why even seasoned professionals face hurdles when deploying production-ready solutions.

Advanced frameworks rely on intricate mathematical concepts like gradient optimisation and probabilistic modelling. Translating these theories into functional code tests both analytical thinking and programming discipline. One miscalculation in loss functions or matrix operations can derail entire projects.

Scaling prototypes introduces another layer of complexity. Software engineering practices become critical when managing data pipelines or optimising model latency. Teams must reconcile rapid experimentation with maintainable architecture – a balance requiring cross-functional collaboration.

Debugging presents unique problems compared to traditional development. Issues might stem from skewed training data, unsuitable algorithms, or hardware limitations. Unlike conventional code errors, these flaws often manifest as gradual performance degradation rather than immediate crashes.

The field’s rapid evolution compounds these challenges. New architectures and libraries emerge monthly, demanding continuous skill updates. Professionals must prioritise lifelong learning while maintaining core competencies in statistics and system design.

Overcoming Common Data Challenges

Raw datasets often resemble unfinished jigsaws: pieces scattered, edges rough. Transforming these fragments into actionable insights demands meticulous preparation. Success hinges on systematic approaches to data cleansing and structuring, processes often estimated to consume 70-80% of project time in typical workflows.

Data Cleaning and Preparation Strategies

Initial steps involve diagnosing quality issues. Missing values, duplicate entries, and inconsistent formats top the list of culprits skewing results. As one data engineer notes: “Clean data doesn’t guarantee success, but dirty data ensures failure.”

| Challenge | Tool | Resolution |
|---|---|---|
| Missing Values | Pandas | Impute using median/mode |
| Outlier Detection | NumPy | Interquartile range analysis |
| Format Standardisation | Regular Expressions | Pattern-based correction |
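Interquartile range analysis can be sketched with NumPy's `percentile` function. The helper name `iqr_outliers`, the conventional multiplier of 1.5, and the sample readings are assumptions for illustration.

```python
import numpy as np

def iqr_outliers(values, k=1.5):
    """Return a boolean mask marking values outside the IQR fences."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    # Anything beyond k * IQR from the quartiles is flagged.
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (values < lower) | (values > upper)

readings = [10, 12, 11, 13, 12, 11, 95, 12, 10, 13]
mask = iqr_outliers(readings)
```

Only the reading of 95 falls outside the fences here. Whether a flagged value is an error or a genuine extreme still needs a human judgement.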

Large datasets demand workflows optimised for memory constraints. Techniques like chunk processing prevent system crashes during analysis. Iterative cleaning cycles reveal hidden issues, requiring flexible adjustments.
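Chunk processing can be sketched with the `chunksize` argument of Pandas' `read_csv`, which yields the file in pieces instead of loading it whole. The in-memory CSV and the `amount` column below stand in for a large file on disk and are purely illustrative.

```python
import io
import pandas as pd

# Stand-in for a large file; in practice a file path would be passed instead.
csv_data = io.StringIO("amount\n" + "\n".join(str(i) for i in range(1, 1001)))

total, count = 0.0, 0
# With chunksize set, read_csv yields DataFrames of at most 100 rows each.
for chunk in pd.read_csv(csv_data, chunksize=100):
    total += chunk["amount"].sum()
    count += len(chunk)

mean_amount = total / count
```

Only running totals are kept between chunks, so memory use stays roughly constant regardless of file size.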

Balancing thoroughness with efficiency remains critical. Over-engineering preprocessing steps delays projects, while hasty fixes compromise model accuracy. Strategic prioritisation of high-impact issues streamlines training readiness.

Algorithmic Complexity and Model Training

Navigating algorithmic complexity requires balancing precision with computational efficiency. Modern systems rely on intricate mathematical relationships between variables, demanding meticulous attention to detail. Success hinges on grasping both theoretical concepts and practical implementation trade-offs.

Understanding Algorithm Functionality

Algorithms work by transforming inputs through sequential operations governed by statistical principles. Their effectiveness depends on selecting appropriate parameters like learning rates or regularisation weights. Misconfigurations here lead to models that either underperform or consume excessive time and resources.
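The effect of a poorly chosen learning rate is easy to see on a toy problem. This sketch minimises f(x) = x² with three assumed learning rates; the function name and values are chosen only for illustration.

```python
def minimise_quadratic(learning_rate, steps=50, start=10.0):
    """Run gradient descent on f(x) = x**2, whose gradient is 2 * x."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2.0 * x
    return x

too_small = minimise_quadratic(0.001)  # barely moves away from the start
reasonable = minimise_quadratic(0.1)   # settles close to the minimum at 0
too_large = minimise_quadratic(1.1)    # overshoots further on every step
```

The same number of steps is wasted in the first case, well spent in the second, and actively harmful in the third, where each update lands further from the minimum than the last.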

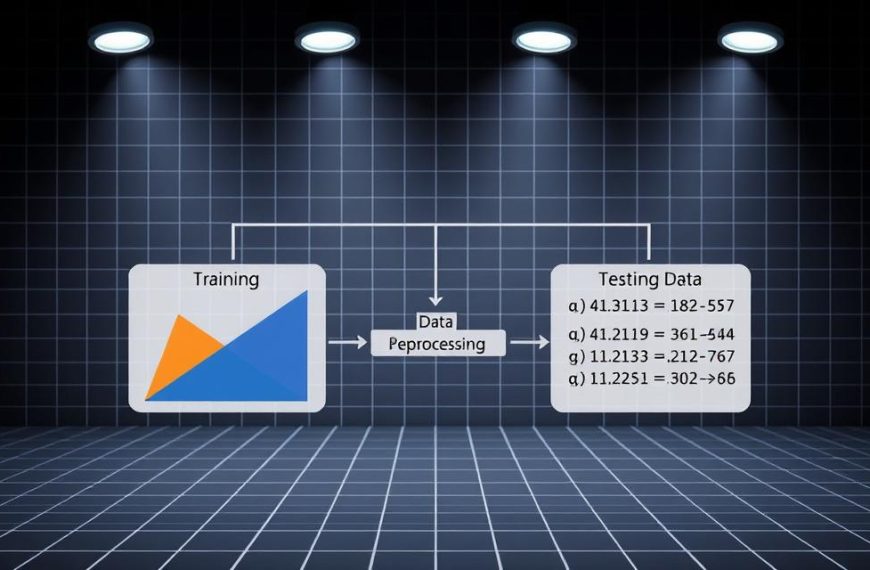

Techniques for Effective Training

Streamlined training processes begin with data normalisation and feature engineering. Techniques like cross-validation help detect overfitting by evaluating models on samples held out from training. Hardware acceleration tools (e.g., GPU clusters) reduce computation time for large datasets.
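The mechanics of k-fold cross-validation come down to how the sample indices are split. The generator below is a minimal sketch with an assumed name, `k_fold_indices`; it does not shuffle, which a practical version usually would.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k roughly equal folds."""
    indices = list(range(n_samples))
    # Spread any remainder across the first few folds.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
```

Each sample lands in exactly one validation fold, so every model is scored on data it did not see during fitting.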

Mastering these elements enables practitioners to optimise model performance systematically. Regular benchmarking against baseline algorithms provides tangible progress metrics. This structured approach transforms abstract complexity into manageable workflows.
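A baseline can be as simple as always predicting the most common class. The sketch below uses made-up spam labels, and the helper name `majority_baseline_accuracy` is an assumption.

```python
from collections import Counter

def majority_baseline_accuracy(train_labels, test_labels):
    """Accuracy of always predicting the most common training label."""
    majority_label, _ = Counter(train_labels).most_common(1)[0]
    correct = sum(1 for label in test_labels if label == majority_label)
    return correct / len(test_labels)

# Hypothetical label sets for a spam classifier.
train_labels = ["ham"] * 80 + ["spam"] * 20
test_labels = ["ham"] * 15 + ["spam"] * 5
baseline = majority_baseline_accuracy(train_labels, test_labels)
```

Here the baseline reaches 75% accuracy without learning anything, so a trained model needs to beat that figure before its score means much.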